内容

- 使用原始 Megatron-LM 训练 GPT-2

- 训练数据设置

- 运行未修改的 Megatron-LM GPT2 模型

- 启用 DeepSpeed

- 参数解析

- 初始化和训练

- 初始化

- 使用训练 API

- 前向传播

- 反向传播

- 更新模型参数

- 损失缩放

- 检查点保存和加载

- DeepSpeed 激活检查点(可选)

- 训练脚本

- 使用 GPT-2 的 DeepSpeed 评估

如果您还没有,我们建议您在逐步完成本教程之前先通读入门指南。

在本教程中,我们将向 Megatron-LM GPT2 模型添加 DeepSpeed,这是一个强大的大型变压器。Megatron-LM 支持模型并行和多节点训练。更多详情请参阅相应论文:Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism。

首先,我们讨论数据和环境设置以及如何使用原始 Megatron-LM 训练 GPT-2 模型。接下来,我们将逐步使该模型能够与 DeepSpeed 一起运行。最后,我们展示了使用 DeepSpeed 带来的性能提升和内存占用减少。

使用原始 Megatron-LM 训练 GPT-2

我们已将原始模型代码从Megatron-LM复制到 DeepSpeed Megatron-LM中,并将其作为子模块提供。要下载,请执行:

<span style="background-color:#263238"><span style="color:#eeffff"><code>git submodule update <span style="color:#89ddff">--init</span> <span style="color:#89ddff">--recursive</span>

</code></span></span>训练数据设置

- 按照 Megatron 的说明下载

webtext数据并将符号链接置于DeepSpeedExamples/Megatron-LM/data:

运行未修改的 Megatron-LM GPT2 模型固定链接

- 对于单个 GPU 运行:

- 更改

scripts/pretrain_gpt2.sh,将其--train-data参数设置为"webtext"。 - 跑步

bash scripts/pretrain_gpt2.sh

- 更改

- 对于多个 GPU 和/或节点运行:

- 改变

scripts/pretrain_gpt2_model_parallel.sh- 将其

--train-data参数设置为"webtext" GPUS_PER_NODE表示测试中涉及的每个节点有多少 GPUNNODES指示有多少个节点参与测试

- 将其

- 跑步

bash scripts/pretrain_gpt2_model_parallel.sh

- 改变

启用 DeepSpeed固定链接

要使用 DeepSpeed,我们将修改三个文件:

arguments.py:参数配置pretrain_gpt2.py: 训练的主要切入点utils.py:检查点保存和加载实用程序

参数解析

第一步是将 DeepSpeed 参数添加到 Megatron-LM GPT2 模型,使用deepspeed.add_config_arguments()in arguments.py.

<span style="background-color:#263238"><span style="color:#eeffff"><code><span style="color:#c792ea">def</span> <span style="color:#82aaff">get_args</span><span style="color:#eeffff">():</span><span style="color:#c3e88d">"""Parse all the args."""</span><span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">argparse</span><span style="color:#eeffff">.</span><span style="color:#eeffff">ArgumentParser</span><span style="color:#eeffff">(</span><span style="color:#eeffff">description</span><span style="color:#89ddff">=</span><span style="color:#c3e88d">'PyTorch BERT Model'</span><span style="color:#eeffff">)</span><span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">add_model_config_args</span><span style="color:#eeffff">(</span><span style="color:#eeffff">parser</span><span style="color:#eeffff">)</span><span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">add_fp16_config_args</span><span style="color:#eeffff">(</span><span style="color:#eeffff">parser</span><span style="color:#eeffff">)</span><span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">add_training_args</span><span style="color:#eeffff">(</span><span style="color:#eeffff">parser</span><span style="color:#eeffff">)</span><span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">add_evaluation_args</span><span style="color:#eeffff">(</span><span style="color:#eeffff">parser</span><span style="color:#eeffff">)</span><span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">add_text_generate_args</span><span style="color:#eeffff">(</span><span style="color:#eeffff">parser</span><span style="color:#eeffff">)</span><span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">add_data_args</span><span style="color:#eeffff">(</span><span style="color:#eeffff">parser</span><span style="color:#eeffff">)</span><span style="color:#b2ccd6"># Include DeepSpeed configuration arguments

</span> <span style="color:#eeffff">parser</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">add_config_arguments</span><span style="color:#eeffff">(</span><span style="color:#eeffff">parser</span><span style="color:#eeffff">)</span>

</code></span></span>初始化和训练固定链接

我们将修改pretrain.py以启用 DeepSpeed 训练。

初始化固定链接

我们用来deepspeed.initialize创建model_engine,optimizer和 LR scheduler。下面是它的定义:

<span style="background-color:#263238"><span style="color:#eeffff"><code><span style="color:#c792ea">def</span> <span style="color:#82aaff">initialize</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">,</span><span style="color:#eeffff">model</span><span style="color:#eeffff">,</span><span style="color:#eeffff">optimizer</span><span style="color:#89ddff">=</span><span style="color:#eeffff">None</span><span style="color:#eeffff">,</span><span style="color:#eeffff">model_parameters</span><span style="color:#89ddff">=</span><span style="color:#eeffff">None</span><span style="color:#eeffff">,</span><span style="color:#eeffff">training_data</span><span style="color:#89ddff">=</span><span style="color:#eeffff">None</span><span style="color:#eeffff">,</span><span style="color:#eeffff">lr_scheduler</span><span style="color:#89ddff">=</span><span style="color:#eeffff">None</span><span style="color:#eeffff">,</span><span style="color:#eeffff">mpu</span><span style="color:#89ddff">=</span><span style="color:#eeffff">None</span><span style="color:#eeffff">,</span><span style="color:#eeffff">dist_init_required</span><span style="color:#89ddff">=</span><span style="color:#eeffff">True</span><span style="color:#eeffff">,</span><span style="color:#eeffff">collate_fn</span><span style="color:#89ddff">=</span><span style="color:#eeffff">None</span><span style="color:#eeffff">):</span>

</code></span></span>对于 Megatron-LM GPT2 模型,我们在其setup_model_and_optimizer()函数中初始化 DeepSpeed,如下所示,以传递原始的model, optimizer, args,lr_scheduler和mpu.

<span style="background-color:#263238"><span style="color:#eeffff"><code><span style="color:#c792ea">def</span> <span style="color:#82aaff">setup_model_and_optimizer</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">):</span><span style="color:#c3e88d">"""Setup model and optimizer."""</span><span style="color:#eeffff">model</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">get_model</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">)</span><span style="color:#eeffff">optimizer</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">get_optimizer</span><span style="color:#eeffff">(</span><span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">)</span><span style="color:#eeffff">lr_scheduler</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">get_learning_rate_scheduler</span><span style="color:#eeffff">(</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">)</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#89ddff">import</span> <span style="color:#ffcb6b">deepspeed</span><span style="color:#eeffff">print_rank_0</span><span style="color:#eeffff">(</span><span style="color:#c3e88d">"DeepSpeed is enabled."</span><span style="color:#eeffff">)</span><span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">optimizer</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">_</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">lr_scheduler</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">initialize</span><span style="color:#eeffff">(</span><span style="color:#eeffff">model</span><span style="color:#89ddff">=</span><span style="color:#eeffff">model</span><span style="color:#eeffff">,</span><span style="color:#eeffff">optimizer</span><span style="color:#89ddff">=</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">,</span><span style="color:#eeffff">args</span><span style="color:#89ddff">=</span><span style="color:#eeffff">args</span><span style="color:#eeffff">,</span><span style="color:#eeffff">lr_scheduler</span><span style="color:#89ddff">=</span><span style="color:#eeffff">lr_scheduler</span><span style="color:#eeffff">,</span><span style="color:#eeffff">mpu</span><span style="color:#89ddff">=</span><span style="color:#eeffff">mpu</span><span style="color:#eeffff">,</span><span style="color:#eeffff">dist_init_required</span><span style="color:#89ddff">=</span><span style="color:#eeffff">False</span><span style="color:#eeffff">)</span>

</code></span></span>请注意,启用 FP16 后,Megatron-LM GPT2 会向Adam优化器添加一个包装器。DeepSpeed 有自己的 FP16 优化器,所以我们需要Adam直接将优化器传递给 DeepSpeed,而不需要任何包装器。get_optimizer()我们从启用 DeepSpeed 时返回展开的 Adam 优化器。

<span style="background-color:#263238"><span style="color:#eeffff"><code><span style="color:#c792ea">def</span> <span style="color:#82aaff">get_optimizer</span><span style="color:#eeffff">(</span><span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">):</span><span style="color:#c3e88d">"""Setup the optimizer."""</span><span style="color:#eeffff">......</span><span style="color:#b2ccd6"># Use Adam.

</span> <span style="color:#eeffff">optimizer</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">Adam</span><span style="color:#eeffff">(</span><span style="color:#eeffff">param_groups</span><span style="color:#eeffff">,</span><span style="color:#eeffff">lr</span><span style="color:#89ddff">=</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">lr</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">weight_decay</span><span style="color:#89ddff">=</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">weight_decay</span><span style="color:#eeffff">)</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#b2ccd6"># fp16 wrapper is not required for DeepSpeed.

</span> <span style="color:#c792ea">return</span> <span style="color:#eeffff">optimizer</span>

</code></span></span>使用训练 API固定链接

返回model的deepspeed.initialize是DeepSpeed 模型引擎,我们将使用它来使用前向、后向和步进 API 训练模型。

前向传播固定链接

前向传播 API 与 PyTorch 兼容,无需更改。

反向传播固定链接

反向传播是通过backward(loss)直接调用模型引擎来完成的。

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#c792ea">def</span> <span style="color:#82aaff">backward_step</span><span style="color:#eeffff">(</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">lm_loss</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">timers</span><span style="color:#eeffff">):</span><span style="color:#c3e88d">"""Backward step."""</span><span style="color:#b2ccd6"># Total loss.

</span> <span style="color:#eeffff">loss</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">lm_loss</span><span style="color:#b2ccd6"># Backward pass.

</span> <span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#eeffff">model</span><span style="color:#eeffff">.</span><span style="color:#eeffff">backward</span><span style="color:#eeffff">(</span><span style="color:#eeffff">loss</span><span style="color:#eeffff">)</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">zero_grad</span><span style="color:#eeffff">()</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">fp16</span><span style="color:#eeffff">:</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">backward</span><span style="color:#eeffff">(</span><span style="color:#eeffff">loss</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">update_master_grads</span><span style="color:#89ddff">=</span><span style="color:#eeffff">False</span><span style="color:#eeffff">)</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">loss</span><span style="color:#eeffff">.</span><span style="color:#eeffff">backward</span><span style="color:#eeffff">()</span>

</code></span></span>在使用小批量更新权重后,DeepSpeed 会自动处理梯度归零。

此外,DeepSpeed 解决了分布式数据并行和 FP16 问题,在多个地方简化了代码。

(A) DeepSpeed 还在梯度累积边界处自动执行梯度平均。所以我们跳过 allreduce 通信。

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#b2ccd6"># DeepSpeed backward propagation already addressed all reduce communication.

</span> <span style="color:#b2ccd6"># Reset the timer to avoid breaking timer logs below.

</span> <span style="color:#eeffff">timers</span><span style="color:#eeffff">(</span><span style="color:#c3e88d">'allreduce'</span><span style="color:#eeffff">).</span><span style="color:#eeffff">reset</span><span style="color:#eeffff">()</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">torch</span><span style="color:#eeffff">.</span><span style="color:#eeffff">distributed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">all_reduce</span><span style="color:#eeffff">(</span><span style="color:#eeffff">reduced_losses</span><span style="color:#eeffff">.</span><span style="color:#eeffff">data</span><span style="color:#eeffff">)</span><span style="color:#eeffff">reduced_losses</span><span style="color:#eeffff">.</span><span style="color:#eeffff">data</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">reduced_losses</span><span style="color:#eeffff">.</span><span style="color:#eeffff">data</span> <span style="color:#89ddff">/</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">world_size</span><span style="color:#c792ea">if</span> <span style="color:#89ddff">not</span> <span style="color:#eeffff">USE_TORCH_DDP</span><span style="color:#eeffff">:</span><span style="color:#eeffff">timers</span><span style="color:#eeffff">(</span><span style="color:#c3e88d">'allreduce'</span><span style="color:#eeffff">).</span><span style="color:#eeffff">start</span><span style="color:#eeffff">()</span><span style="color:#eeffff">model</span><span style="color:#eeffff">.</span><span style="color:#eeffff">allreduce_params</span><span style="color:#eeffff">(</span><span style="color:#eeffff">reduce_after</span><span style="color:#89ddff">=</span><span style="color:#eeffff">False</span><span style="color:#eeffff">,</span><span style="color:#eeffff">fp32_allreduce</span><span style="color:#89ddff">=</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">fp32_allreduce</span><span style="color:#eeffff">)</span><span style="color:#eeffff">timers</span><span style="color:#eeffff">(</span><span style="color:#c3e88d">'allreduce'</span><span style="color:#eeffff">).</span><span style="color:#eeffff">stop</span><span style="color:#eeffff">()</span></code></span></span>(B) 我们也跳过更新主梯度,因为 DeepSpeed 在内部解决了它。

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#b2ccd6"># Update master gradients.

</span> <span style="color:#c792ea">if</span> <span style="color:#89ddff">not</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">fp16</span><span style="color:#eeffff">:</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">update_master_grads</span><span style="color:#eeffff">()</span><span style="color:#b2ccd6"># Clipping gradients helps prevent the exploding gradient.

</span> <span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">clip_grad</span> <span style="color:#89ddff">></span> <span style="color:#f78c6c">0</span><span style="color:#eeffff">:</span><span style="color:#c792ea">if</span> <span style="color:#89ddff">not</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">fp16</span><span style="color:#eeffff">:</span><span style="color:#eeffff">mpu</span><span style="color:#eeffff">.</span><span style="color:#eeffff">clip_grad_norm</span><span style="color:#eeffff">(</span><span style="color:#eeffff">model</span><span style="color:#eeffff">.</span><span style="color:#eeffff">parameters</span><span style="color:#eeffff">(),</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">clip_grad</span><span style="color:#eeffff">)</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">clip_master_grads</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">clip_grad</span><span style="color:#eeffff">)</span><span style="color:#c792ea">return</span> <span style="color:#eeffff">lm_loss_reduced</span></code></span></span>更新模型参数

DeepSpeed 引擎中的函数step()更新模型参数以及学习率。

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#eeffff">model</span><span style="color:#eeffff">.</span><span style="color:#eeffff">step</span><span style="color:#eeffff">()</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">step</span><span style="color:#eeffff">()</span><span style="color:#b2ccd6"># Update learning rate.

</span> <span style="color:#c792ea">if</span> <span style="color:#89ddff">not</span> <span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">fp16</span> <span style="color:#89ddff">and</span> <span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">overflow</span><span style="color:#eeffff">):</span><span style="color:#eeffff">lr_scheduler</span><span style="color:#eeffff">.</span><span style="color:#eeffff">step</span><span style="color:#eeffff">()</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">skipped_iter</span> <span style="color:#89ddff">=</span> <span style="color:#f78c6c">1</span></code></span></span>损失缩放

GPT2 训练脚本记录训练期间的损失缩放值。在 DeepSpeed 优化器内部,此值存储为 ascur_scale而不是loss_scaleMegatron 优化器中的 as。因此,我们在日志字符串中适当地替换它。

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">fp16</span><span style="color:#eeffff">:</span><span style="color:#eeffff">log_string</span> <span style="color:#89ddff">+=</span> <span style="color:#c3e88d">' loss scale {:.1f} |'</span><span style="color:#eeffff">.</span><span style="color:#eeffff">format</span><span style="color:#eeffff">(</span><span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">cur_scale</span> <span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span> <span style="color:#c792ea">else</span> <span style="color:#eeffff">optimizer</span><span style="color:#eeffff">.</span><span style="color:#eeffff">loss_scale</span><span style="color:#eeffff">)</span></code></span></span>检查点保存和加载

DeepSpeed 引擎具有用于检查点保存和加载的灵活 API,以处理来自客户端模型及其内部的状态。

<span style="background-color:#263238"><span style="color:#eeffff"><code><span style="color:#c792ea">def</span> <span style="color:#82aaff">save_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">self</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">save_dir</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">tag</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">client_state</span><span style="color:#89ddff">=</span><span style="color:#eeffff">{})</span>

<span style="color:#c792ea">def</span> <span style="color:#82aaff">load_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">self</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">load_dir</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">tag</span><span style="color:#eeffff">)</span>

</code></span></span>要使用 DeepSpeed,我们需要更新utils.pyMegatron-LM GPT2 保存和加载检查点的位置。

创建一个新函数save_ds_checkpoint(),如下所示。新函数收集客户端模型状态并通过调用 DeepSpeed 的save_checkpoint().

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#c792ea">def</span> <span style="color:#82aaff">save_ds_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">iteration</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">):</span><span style="color:#c3e88d">"""Save a model checkpoint."""</span><span style="color:#eeffff">sd</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">{}</span><span style="color:#eeffff">sd</span><span style="color:#eeffff">[</span><span style="color:#c3e88d">'iteration'</span><span style="color:#eeffff">]</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">iteration</span><span style="color:#b2ccd6"># rng states.

</span> <span style="color:#c792ea">if</span> <span style="color:#89ddff">not</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">no_save_rng</span><span style="color:#eeffff">:</span><span style="color:#eeffff">sd</span><span style="color:#eeffff">[</span><span style="color:#c3e88d">'random_rng_state'</span><span style="color:#eeffff">]</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">random</span><span style="color:#eeffff">.</span><span style="color:#eeffff">getstate</span><span style="color:#eeffff">()</span><span style="color:#eeffff">sd</span><span style="color:#eeffff">[</span><span style="color:#c3e88d">'np_rng_state'</span><span style="color:#eeffff">]</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">np</span><span style="color:#eeffff">.</span><span style="color:#eeffff">random</span><span style="color:#eeffff">.</span><span style="color:#eeffff">get_state</span><span style="color:#eeffff">()</span><span style="color:#eeffff">sd</span><span style="color:#eeffff">[</span><span style="color:#c3e88d">'torch_rng_state'</span><span style="color:#eeffff">]</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">torch</span><span style="color:#eeffff">.</span><span style="color:#eeffff">get_rng_state</span><span style="color:#eeffff">()</span><span style="color:#eeffff">sd</span><span style="color:#eeffff">[</span><span style="color:#c3e88d">'cuda_rng_state'</span><span style="color:#eeffff">]</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">get_accelerator</span><span style="color:#eeffff">().</span><span style="color:#eeffff">get_rng_state</span><span style="color:#eeffff">()</span><span style="color:#eeffff">sd</span><span style="color:#eeffff">[</span><span style="color:#c3e88d">'rng_tracker_states'</span><span style="color:#eeffff">]</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">mpu</span><span style="color:#eeffff">.</span><span style="color:#eeffff">get_cuda_rng_tracker</span><span style="color:#eeffff">().</span><span style="color:#eeffff">get_states</span><span style="color:#eeffff">()</span><span style="color:#eeffff">model</span><span style="color:#eeffff">.</span><span style="color:#eeffff">save_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">save</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">iteration</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">client_state</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">sd</span><span style="color:#eeffff">)</span></code></span></span>在 Megatron-LM GPT2 的save_checkpoint()函数中,添加以下行来为 DeepSpeed 调用上述函数。

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#c792ea">def</span> <span style="color:#82aaff">save_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">iteration</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">optimizer</span><span style="color:#eeffff">,</span><span style="color:#eeffff">lr_scheduler</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">):</span><span style="color:#c3e88d">"""Save a model checkpoint."""</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#eeffff">save_ds_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">iteration</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">)</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">......</span></code></span></span>在load_checkpoint()函数中,使用如下所示的 DeepSpeed 检查点加载 API,并返回客户端模型的状态。

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#c792ea">def</span> <span style="color:#82aaff">load_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">model</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">optimizer</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">lr_scheduler</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">):</span><span style="color:#c3e88d">"""Load a model checkpoint."""</span><span style="color:#eeffff">iteration</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">release</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">get_checkpoint_iteration</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">)</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">:</span><span style="color:#eeffff">checkpoint_name</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">sd</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">model</span><span style="color:#eeffff">.</span><span style="color:#eeffff">load_checkpoint</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">load</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">iteration</span><span style="color:#eeffff">)</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">checkpoint_name</span> <span style="color:#89ddff">is</span> <span style="color:#eeffff">None</span><span style="color:#eeffff">:</span><span style="color:#c792ea">if</span> <span style="color:#eeffff">mpu</span><span style="color:#eeffff">.</span><span style="color:#eeffff">get_data_parallel_rank</span><span style="color:#eeffff">()</span> <span style="color:#89ddff">==</span> <span style="color:#f78c6c">0</span><span style="color:#eeffff">:</span><span style="color:#c792ea">print</span><span style="color:#eeffff">(</span><span style="color:#c3e88d">"Unable to load checkpoint."</span><span style="color:#eeffff">)</span><span style="color:#c792ea">return</span> <span style="color:#eeffff">iteration</span><span style="color:#c792ea">else</span><span style="color:#eeffff">:</span><span style="color:#eeffff">......</span></code></span></span>DeepSpeed 激活检查点(可选)固定链接

DeepSpeed 可以通过跨模型并行 GPU 划分激活检查点或将它们卸载到 CPU 来减少模型并行训练期间的激活内存。这些优化是可选的,可以跳过,除非激活内存成为瓶颈。为了启用分区激活,我们使用deepspeed.checkpointingAPI 来替换 Megatron 的激活检查点和随机状态跟踪器 API。替换应该在第一次调用这些 API 之前发生。

a) 替换为pretrain_gpt.py:

<span style="background-color:#263238"><span style="color:#eeffff"><code> <span style="color:#b2ccd6"># Optional DeepSpeed Activation Checkpointing Features

</span> <span style="color:#b2ccd6">#

</span> <span style="color:#c792ea">if</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed</span> <span style="color:#89ddff">and</span> <span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed_activation_checkpointing</span><span style="color:#eeffff">:</span><span style="color:#eeffff">set_deepspeed_activation_checkpointing</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">)</span><span style="color:#c792ea">def</span> <span style="color:#82aaff">set_deepspeed_activation_checkpointing</span><span style="color:#eeffff">(</span><span style="color:#eeffff">args</span><span style="color:#eeffff">):</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpointing</span><span style="color:#eeffff">.</span><span style="color:#eeffff">configure</span><span style="color:#eeffff">(</span><span style="color:#eeffff">mpu</span><span style="color:#eeffff">,</span><span style="color:#eeffff">deepspeed_config</span><span style="color:#89ddff">=</span><span style="color:#eeffff">args</span><span style="color:#eeffff">.</span><span style="color:#eeffff">deepspeed_config</span><span style="color:#eeffff">,</span><span style="color:#eeffff">partition_activation</span><span style="color:#89ddff">=</span><span style="color:#eeffff">True</span><span style="color:#eeffff">)</span><span style="color:#eeffff">mpu</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpoint</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpointing</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpoint</span><span style="color:#eeffff">mpu</span><span style="color:#eeffff">.</span><span style="color:#eeffff">get_cuda_rng_tracker</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpointing</span><span style="color:#eeffff">.</span><span style="color:#eeffff">get_cuda_rng_tracker</span><span style="color:#eeffff">mpu</span><span style="color:#eeffff">.</span><span style="color:#eeffff">model_parallel_cuda_manual_seed</span> <span style="color:#89ddff">=</span><span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpointing</span><span style="color:#eeffff">.</span><span style="color:#eeffff">model_parallel_cuda_manual_seed</span>

</code></span></span>b) 替换为mpu/transformer.py:

<span style="background-color:#263238"><span style="color:#eeffff"><code><span style="color:#c792ea">if</span> <span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpointing</span><span style="color:#eeffff">.</span><span style="color:#eeffff">is_configured</span><span style="color:#eeffff">():</span><span style="color:#c792ea">global</span> <span style="color:#eeffff">get_cuda_rng_tracker</span><span style="color:#eeffff">,</span> <span style="color:#eeffff">checkpoint</span><span style="color:#eeffff">get_cuda_rng_tracker</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpoint</span><span style="color:#eeffff">.</span><span style="color:#eeffff">get_cuda_rng_tracker</span><span style="color:#eeffff">checkpoint</span> <span style="color:#89ddff">=</span> <span style="color:#eeffff">deepspeed</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpointing</span><span style="color:#eeffff">.</span><span style="color:#eeffff">checkpoint</span></code></span></span>deepspeed.checkpointing.configure通过这些替换,可以在文件中或在文件中指定各种 DeepSpeed 激活检查点优化,例如激活分区、连续检查点和 CPU 检查点deepspeed_config。

训练脚本固定链接

我们假设webtext数据已在上一步中准备好。要开始训练应用了 DeepSpeed 的 Megatron-LM GPT2 模型,请执行以下命令开始训练。

- 单 GPU 运行

- 跑步

bash scripts/ds_pretrain_gpt2.sh

- 跑步

- 多个 GPU/节点运行

- 跑步

bash scripts/ds_zero2_pretrain_gpt2_model_parallel.sh

- 跑步

使用 GPT-2 的 DeepSpeed 评估固定链接

DeepSpeed 可以通过先进的ZeRO 优化器有效地训练非常大的模型。2020 年 2 月,我们在 DeepSpeed 中发布了 ZeRO 的优化子集,执行优化器状态分区。我们将它们称为 ZeRO-1。2020 年 5 月,我们扩展了 DeepSpeed 中的 ZeRO-1,以包括来自 ZeRO 的额外优化,包括梯度和激活分区,以及连续内存优化。我们将此版本称为 ZeRO-2。

ZeRO-2 显着减少了训练大型模型的内存占用,这意味着大型模型可以在 i) 较少的模型并行度和 ii) 较大的批量大小下进行训练。较低的模型并行度通过增加计算的粒度来提高训练效率,例如矩阵乘法,其中性能与矩阵的大小直接相关。此外,较少的模型并行性还会导致模型并行 GPU 之间的通信减少,从而进一步提高性能。较大的批量大小具有增加计算粒度和减少通信的类似效果,也会带来更好的性能。因此,通过将 DeepSpeed 和 ZeRO-2 集成到威震天中,与单独使用威震天相比,我们将模型规模和速度提升到了一个全新的水平。

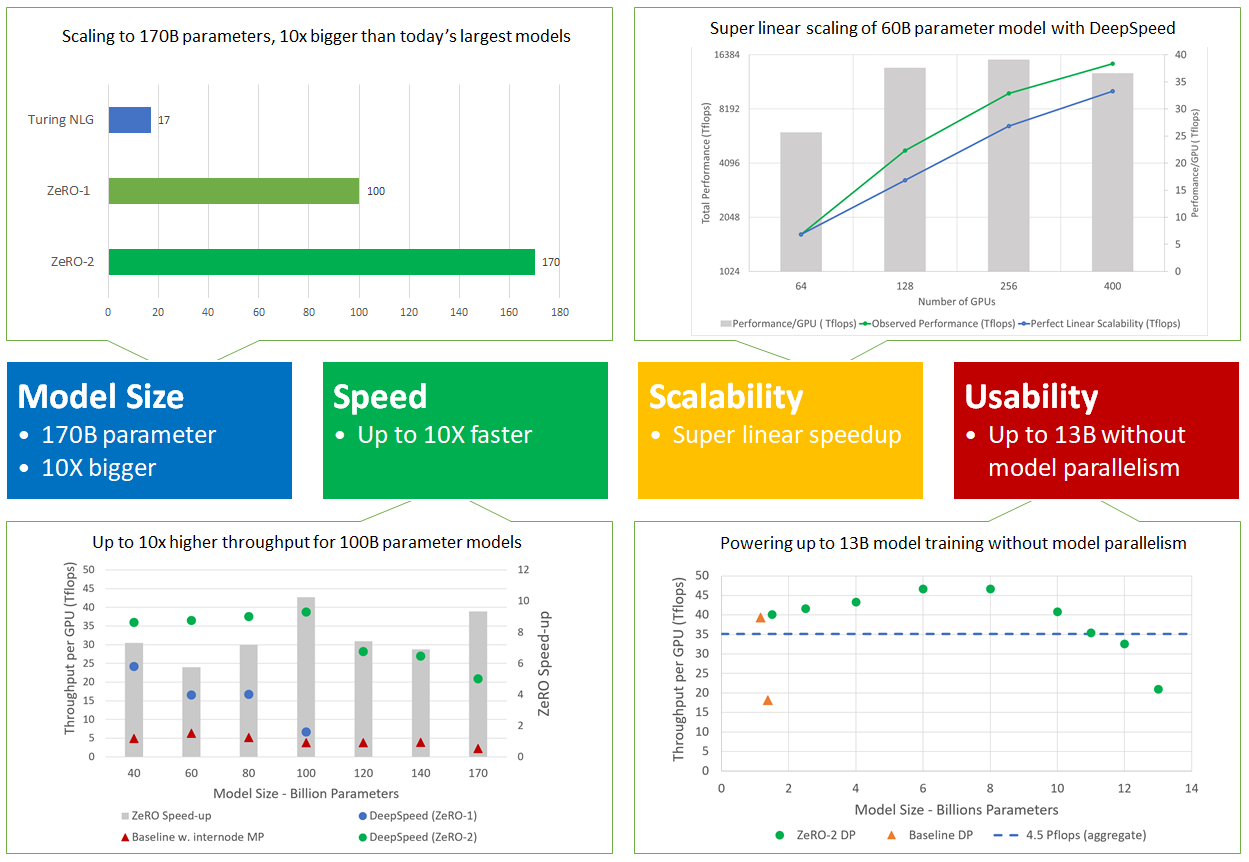

图 2:ZeRO-2 可扩展到 1700 亿个参数,具有高达 10 倍的吞吐量,获得超线性加速,并通过避免对多达 130 亿个参数的模型进行代码重构来提高可用性。

更具体地说,DeepSpeed 和 ZeRO-2 在四个方面表现出色(如图 2 所示),支持数量级更大的模型,速度提高 10 倍,具有超线性可扩展性,并提高了使大型模型训练民主化的可用性。下面就这四个方面进行详细介绍。

模型大小:最先进的大型模型,如 OpenAI GPT-2、NVIDIA Megatron-LM、Google T5 和 Microsoft Turing-NLG,其参数大小分别为 1.5B、8.3B、11B 和 17B。ZeRO-2 提供系统支持以高效运行 1700 亿个参数的模型,比这些最大的模型大一个数量级(图 2,左上角)。

速度:提高内存效率可以提高吞吐量和加快训练速度。图 2(左下)显示了 ZeRO-2 和 ZeRO-1 的系统吞吐量(两者都结合了 ZeRO 支持的数据并行性和 NVIDIA Megatron-LM 模型并行性)以及使用最先进的模型并行性方法 Megatron- LM 单独(图 2 中的基线,左下角)。ZeRO-2 在 400 个 NVIDIA V100 GPU 集群上运行 1000 亿个参数模型,每个 GPU 超过 38 teraflops,聚合性能超过 15 petaflops。对于相同大小的模型,ZeRO-2 的训练速度比单独使用 Megatron-LM 快 10 倍,比 ZeRO-1 快 5 倍。

可扩展性:我们观察到超线性加速(图 2,右上角),当 GPU 数量增加一倍时,性能会增加一倍以上。随着我们提高数据并行度,ZeRO-2 减少了模型状态的内存占用,使我们能够适应每个 GPU 更大的批处理大小,从而获得更好的性能。

大众化大型模型训练:ZeRO-2 使模型科学家能够有效地训练多达 130 亿个参数的模型,而无需任何通常需要模型重构的模型并行性(图 2,右下角)。130 亿个参数比大多数最先进的模型(例如 Google T5,有 110 亿个参数)都要大。因此,模型科学家可以自由地试验大型模型,而不必担心模型并行性。相比之下,经典数据并行方法(例如 PyTorch 分布式数据并行)的实现在 14 亿参数模型下会耗尽内存,而 ZeRO-1 支持多达 60 亿参数进行比较。

此外,在没有模型并行性的情况下,这些模型可以在低带宽集群上进行训练,同时与使用模型并行性相比仍然可以获得明显更好的吞吐量。例如,与在通过 40 Gbps Infiniband 互连连接的四节点集群上使用模型并行相比,使用 ZeRO 驱动的数据并行可以训练 GPT-2 模型快近 4 倍,其中每个节点有四个 NVIDIA 16GB V100 GPU 与 PCI-E 连接. 因此,随着这种性能提升,大型模型训练不再局限于具有超快速互连的 GPU 集群,也可以在带宽有限的适度集群上进行。