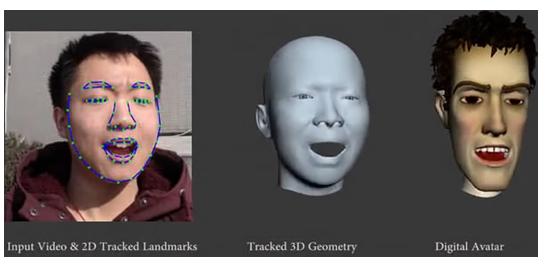

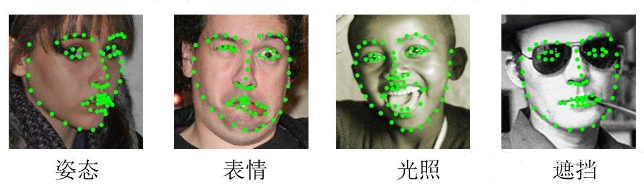

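dlib offers two ways to align a face.

- The first is the get_face_chip() method, which aligns the face using the 5-point landmark model. Dlib already provides a complete interface for this (imutils has a similar helper, face_utils.FaceAligner; its code is at the end of this post).

- The second is to run the 68-point landmark model yourself, solve for a transformation matrix from the landmark coordinates, and apply that matrix to the whole image.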
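To make "solve for a transformation matrix from the landmark coordinates" concrete, here is a minimal sketch that derives a similarity transform (rotation, uniform scale, translation) from two point correspondences, for example the two eye centers and the positions where you want them to end up. This is not dlib's internal method. The function names `similarity_from_two_points` and `apply_affine` and all coordinates are hypothetical, chosen only for illustration.

```python
def similarity_from_two_points(p1, p2, q1, q2):
    """Return a 2x3 matrix (rotation + uniform scale + translation)
    that maps p1 -> q1 and p2 -> q2. Points are (x, y) tuples."""
    # Treat each point as a complex number z = x + iy; a similarity
    # transform is then z' = a * z + b with complex a and b.
    zp1, zp2 = complex(*p1), complex(*p2)
    zq1, zq2 = complex(*q1), complex(*q2)
    if zp1 == zp2:
        raise ValueError("source points must be distinct")
    a = (zq2 - zq1) / (zp2 - zp1)   # rotation and scale
    b = zq1 - a * zp1               # translation
    return [[a.real, -a.imag, b.real],
            [a.imag,  a.real, b.imag]]


def apply_affine(M, point):
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return (M[0][0] * x + M[0][1] * y + M[0][2],
            M[1][0] * x + M[1][1] * y + M[1][2])


# Hypothetical eye centers in the source image and their desired positions
left_eye, right_eye = (100.0, 120.0), (160.0, 140.0)
target_left, target_right = (45.0, 60.0), (105.0, 60.0)

M = similarity_from_two_points(left_eye, right_eye, target_left, target_right)
mapped_left = apply_affine(M, left_eye)
mapped_right = apply_affine(M, right_eye)
```

A matrix of this 2x3 shape, converted to a NumPy float array, is the form that cv2.warpAffine accepts, so the same transform can be applied to the whole image.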

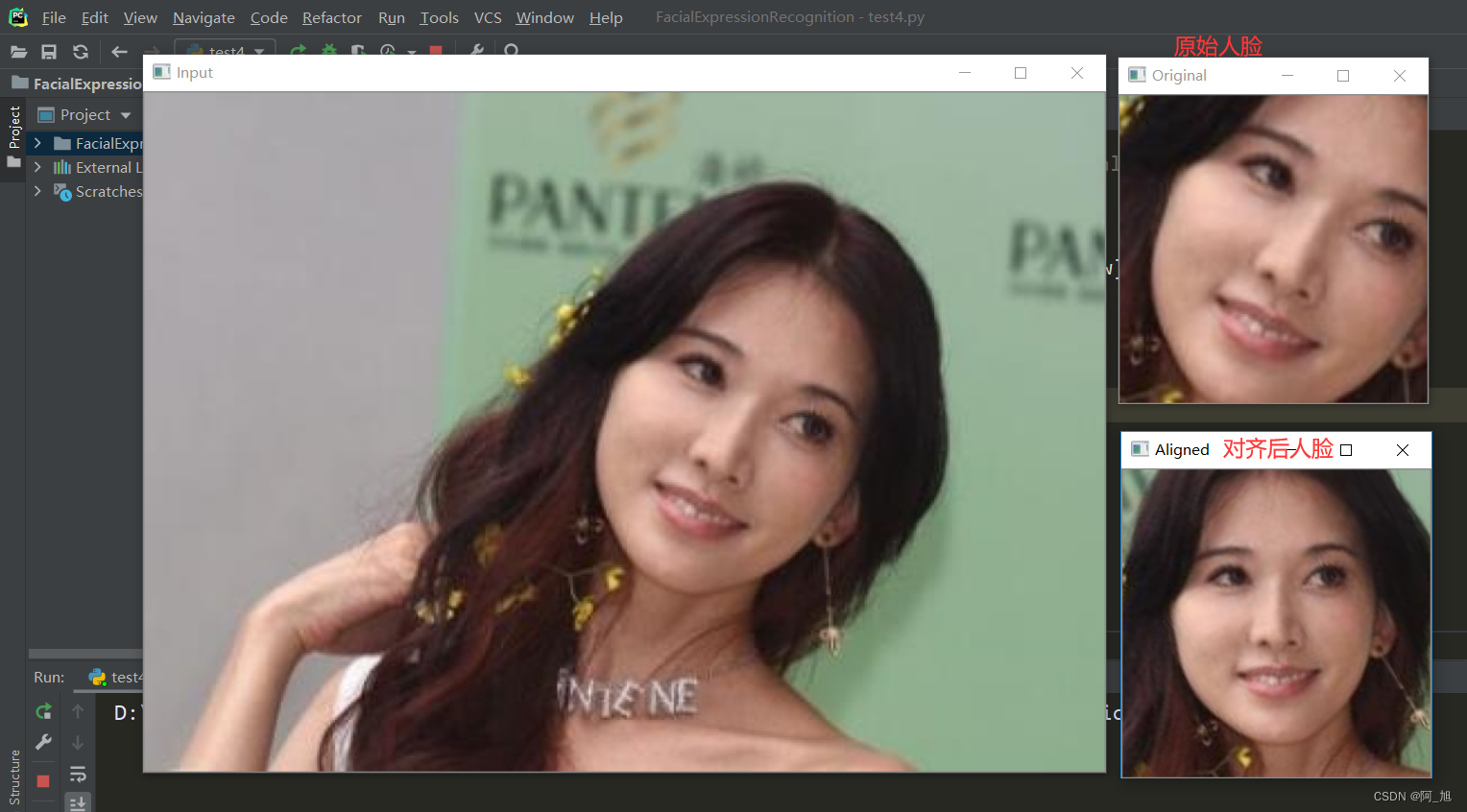

(1) Results with the get_face_chip() method:

    import cv2
    import dlib

    # Load the image (OpenCV reads images in BGR channel order)
    image = cv2.imread('33.jpg')
    image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Detect faces with dlib's frontal face detector
    detector = dlib.get_frontal_face_detector()
    dets = detector(image_gray, 1)
    if not dets:
        raise SystemExit("No face detected")

    for det in dets:
        # Draw the bounding box on the original image.
        # cv2.rectangle arguments: image, top-left corner, bottom-right corner,
        # color (B, G, R), line thickness
        cv2.rectangle(image, (det.left(), det.top()), (det.right(), det.bottom()), (0, 255, 0), 2)
    cv2.imshow("image", image)
    cv2.waitKey(0)

    # Landmark predictor (68-point model)
    predictor = dlib.shape_predictor(r'./shape_predictor_68_face_landmarks.dat')

    for det in dets:
        shape = predictor(image, det)
        # Draw each landmark and its index on the face
        for i in range(68):
            cv2.putText(image, str(i), (shape.part(i).x, shape.part(i).y),
                        cv2.FONT_HERSHEY_DUPLEX, 0.1, (0, 255, 0), 1, cv2.LINE_AA)
            cv2.circle(image, (shape.part(i).x, shape.part(i).y), 1, (0, 0, 255))
    cv2.imshow("68landmarks", image)
    cv2.waitKey(0)

    # Face alignment. shape holds the landmarks of the last detected face.
    image = dlib.get_face_chip(image, shape, size=150)
    cv2.imshow("aligned", image)
    cv2.waitKey(0)

For the underlying principle, see: blog post 1, blog post 2

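(2) The 68-point landmark approach works as follows:

Face alignment steps:

- Compute the center of the left eye and the center of the right eye

- Compute the angle θ between the line joining the two eye centers and the horizontal

- Compute the midpoint between the two eye centers

- Using that midpoint as the pivot, rotate the image array counterclockwise by θ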
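The steps above can be sketched without any image library, which makes the geometry easy to check. The sketch below assumes image coordinates (y grows downward) and builds the matrix in the same layout that OpenCV documents for cv2.getRotationMatrix2D. The helper names and the eye landmark coordinates are hypothetical, made up for illustration.

```python
import math


def eye_center(points):
    """Mean of a list of (x, y) landmark points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (sum(xs) / len(points), sum(ys) / len(points))


def rotation_matrix(center, angle_deg, scale=1.0):
    """2x3 rotation matrix about `center`, laid out as documented for
    cv2.getRotationMatrix2D (positive angle = counterclockwise on screen)."""
    cx, cy = center
    alpha = scale * math.cos(math.radians(angle_deg))
    beta = scale * math.sin(math.radians(angle_deg))
    return [[alpha, beta, (1 - alpha) * cx - beta * cy],
            [-beta, alpha, beta * cx + (1 - alpha) * cy]]


def transform(M, point):
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return (M[0][0] * x + M[0][1] * y + M[0][2],
            M[1][0] * x + M[1][1] * y + M[1][2])


# Hypothetical landmark coordinates for a slightly tilted face
left_eye_pts = [(100, 118), (104, 116), (108, 116), (112, 119), (108, 121), (104, 121)]
right_eye_pts = [(160, 138), (164, 136), (168, 136), (172, 139), (168, 141), (164, 141)]

# Step 1: eye centers
left = eye_center(left_eye_pts)
right = eye_center(right_eye_pts)
# Step 2: angle between the eye line and the horizontal
angle = math.degrees(math.atan2(right[1] - left[1], right[0] - left[0]))
# Step 3: midpoint between the eyes
pivot = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
# Step 4: rotate about the midpoint by theta
M = rotation_matrix(pivot, angle)

new_left = transform(M, left)
new_right = transform(M, right)
```

After the rotation, `new_left` and `new_right` share the same y coordinate, which is exactly the condition that the eyes lie on a horizontal line. Using `atan2` instead of dividing the differences avoids a division by zero when the two eye centers have the same x coordinate.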

The results are as follows:

    import cv2
    import dlib
    import numpy as np


    class Face_Align(object):
        def __init__(self, shape_predictor_path):
            self.detector = dlib.get_frontal_face_detector()
            self.predictor = dlib.shape_predictor(shape_predictor_path)
            # Landmark indices of each eye in the 68-point model
            self.LEFT_EYE_INDICES = [36, 37, 38, 39, 40, 41]
            self.RIGHT_EYE_INDICES = [42, 43, 44, 45, 46, 47]

        def rect_to_tuple(self, rect):
            return rect.left(), rect.top(), rect.right(), rect.bottom()

        def extract_eye(self, shape, eye_indices):
            return [shape.part(i) for i in eye_indices]

        def extract_eye_center(self, shape, eye_indices):
            points = self.extract_eye(shape, eye_indices)
            xs = [p.x for p in points]
            ys = [p.y for p in points]
            return sum(xs) // len(points), sum(ys) // len(points)

        def extract_left_eye_center(self, shape):
            return self.extract_eye_center(shape, self.LEFT_EYE_INDICES)

        def extract_right_eye_center(self, shape):
            return self.extract_eye_center(shape, self.RIGHT_EYE_INDICES)

        def angle_between_2_points(self, p1, p2):
            x1, y1 = p1
            x2, y2 = p2
            # arctan2 avoids a division by zero when x1 == x2
            angle = np.degrees(np.arctan2(y2 - y1, x2 - x1))
            print("Rotation angle:", angle)
            return angle

        def get_rotation_matrix(self, p1, p2):
            angle = self.angle_between_2_points(p1, p2)
            x1, y1 = p1
            x2, y2 = p2
            # Rotate about the midpoint between the two eye centers
            xc = (x1 + x2) // 2
            yc = (y1 + y2) // 2
            M = cv2.getRotationMatrix2D((xc, yc), angle, 1)
            print("Rotation matrix:", M)
            return M

        def crop_image(self, image, det):
            left, top, right, bottom = self.rect_to_tuple(det)
            # Clamp to the image: a detection box can extend past the border
            return image[max(top, 0):bottom, max(left, 0):right]

        def __call__(self, image=None, image_path=None, save_path=None, only_one=True):
            '''
            Align the faces in an image and return the cropped result.

            :image: input image as a BGR array
            :image_path: path to read the image from when image is None
            :save_path: if given, the aligned crop is written to this path
                (only when only_one is True)
            :only_one: return the first aligned face instead of a list of all faces
            '''
            if image is not None:
                # Convert BGR to grayscale
                image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
            elif image_path is not None:
                image_gray = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)
                image = cv2.imread(image_path)
            else:
                raise ValueError("either image or image_path must be provided")
            height, width = image.shape[:2]
            # shape is a tuple of (rows, columns, channels)
            print("Original image shape:", image.shape)

            # Detect faces
            dets = self.detector(image_gray, 1)

            # i denotes the i-th face detected in the image
            crop_images = []
            for i, det in enumerate(dets):
                shape = self.predictor(image_gray, det)
                left_eye = self.extract_left_eye_center(shape)
                right_eye = self.extract_right_eye_center(shape)
                M = self.get_rotation_matrix(left_eye, right_eye)
                rotated = cv2.warpAffine(image, M, (width, height), flags=cv2.INTER_CUBIC)
                cv2.imshow("xuanzhuan", rotated)
                cv2.waitKey(0)
                cropped = self.crop_image(rotated, det)
                if only_one:
                    if save_path is not None:
                        cv2.imwrite(save_path, cropped)
                    return cropped
                else:
                    crop_images.append(cropped)
            return crop_images


    if __name__ == "__main__":
        image = cv2.imread('33.jpg')
        cv2.imshow("image", image)
        cv2.waitKey(0)
        align = Face_Align("./shape_predictor_68_face_landmarks.dat")
        aligned = align(image, image_path=None, save_path="test.jpg", only_one=True)
        print(aligned.shape)
        cv2.imshow("align", aligned)
        cv2.waitKey(0)

For the underlying principle, see: blog post

Main code for face_utils.FaceAligner

    import cv2
    import dlib
    from imutils import face_utils

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")
    fa = face_utils.FaceAligner(predictor, desiredFaceWidth=256)

    # frame is the BGR input image; the original excerpt does not show how it is loaded
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    dets = detector(gray, 1)
    shape = predictor(gray, dets[0])
    frame_align = fa.align(frame, gray, dets[0])  # align the face, then crop it
    cv2.imshow("frame_align", frame_align)
    cv2.waitKey(0)