一、Transform Learning 与 Model Finetune

二、pytorch中的Finetune

一、Transfer Learning 与 Model Finetune

1. 什么是Transfer Learning?

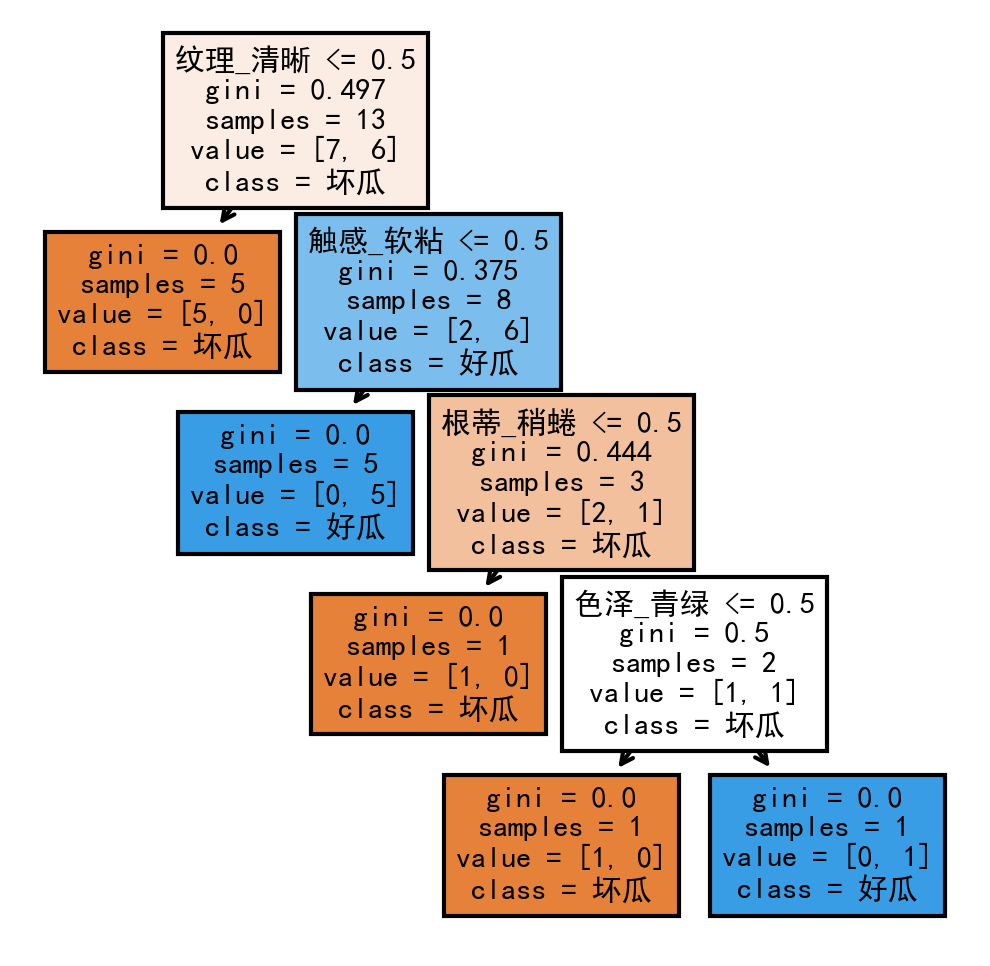

迁移学习是机器学习的一个分支,主要研究源域的知识如何应用到目标域当中。迁移学习是一个很大的概念。

怎么理解源域的知识应用到目标域当中呢?上图是来自一篇迁移学习的综述。左边是传统机器学习的过程,对于不同的任务分别学习得到不同的模型。而右边是迁移学习的示意图,不同的任务会划分为源任务和目标任务,对原任务进行学习,学习到的称之为知识,而我们回利用知识和目标任务进行学习,得到模型。这个模型不仅用到了目标任务,还用到了原任务的知识。

迁移学习就是将源任务的知识应用到目标任务中。

2. 迁移学习与finetune之间的关系

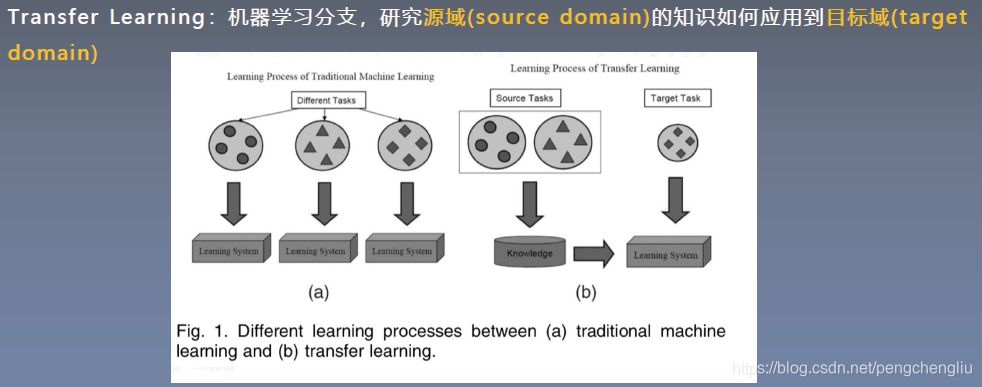

我们训练一个模型,就是不断地更新他的权值。而整个模型最重要的东西也就是他的权值。这个权值呢,也就可以称之为他的知识。而这些知识是可以进行迁移的。我们把这些知识迁移到新任务中,这就是模型微调。

为什么我们使用model finetune这个trick呢?这是因为在新任务中,数据量较小。

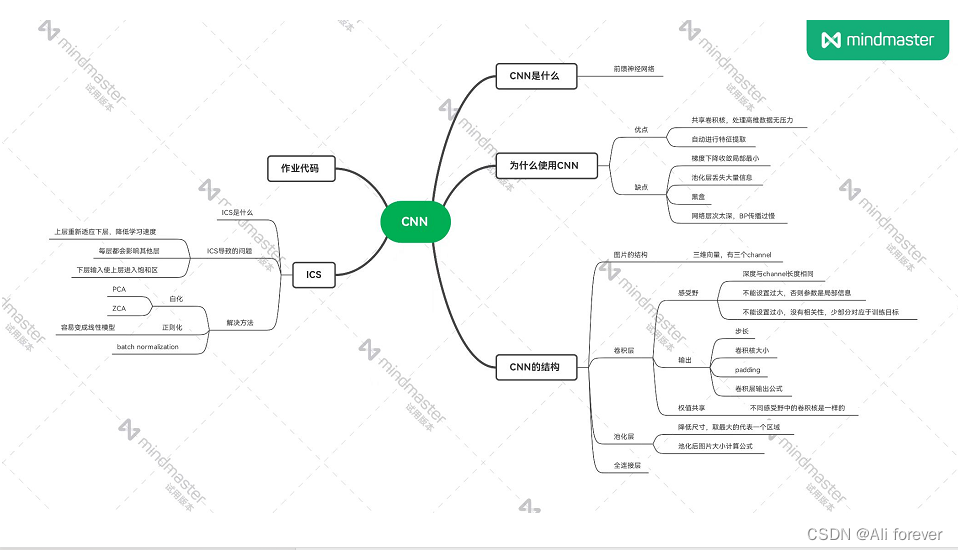

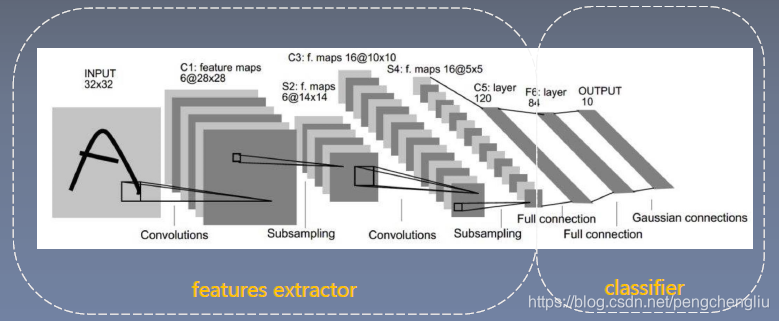

我们来看,神经网络该如何迁移。我们对神经网络,通常会划分为两部分,前面一些列的卷积池化,我们认为是特征提取。后面一些全连接层,我们称之为分类器。

我们对特征提取的部分,认为是比较有共性的地方。而分类器的参数呢,我们认为它与具体的任务有关,通常需要去改变。在这里,有个非常重要的地方,通常都要去改变,这就是最后一个输出层。比如原来是千分类任务,这里是二分类任务,这就需要改变。

二、pytorch中的Finetune

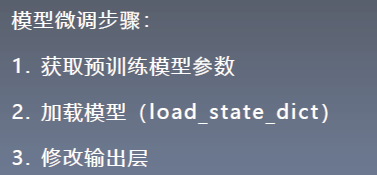

下面我们来看模型finetune需要哪些步骤。

构建好模型之后,在训练时也会常用一些trick。

1. 固定预训练的参数(两种方法:(1) requires_grad = False (2)学习率设为0)

2. 使用较小的学习率。这时候就要用到params_group(参数组)的概念,让不同的部分学习率不同。

三、举例

下面使用Resnet-18进行finetune,来完成时频图二分类任务。

(1)准备工作

模型下载:https://download.pytorch.org/models/resnet18-5c106cde.pth

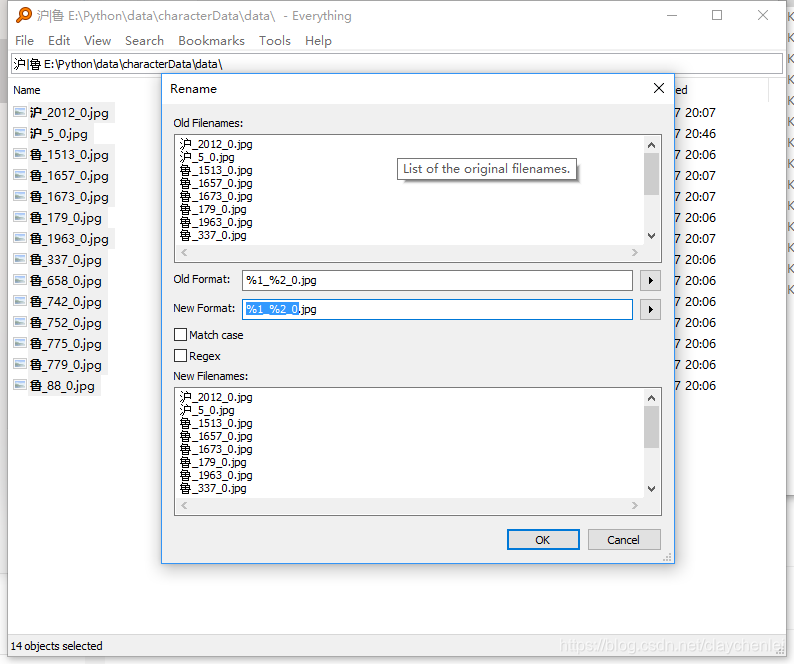

数据准备:

|----data

|----pubu #下载的数据集。

|----train

|----saopin

|----wurenji

|----test

|----resnet18-5c106cde.pth #预训练的模型

|----src

|-----finetune_resnet18.py

|----tools #通用的一些函数

|----my_dataset.py #Dataset

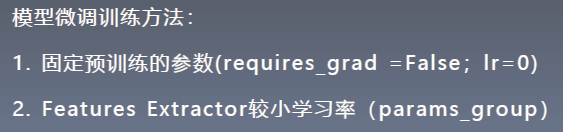

(2)resnet18模型结构

首先是卷积,BN,ReLU,Pool这么一组操作,我们认为是初步的特征提取。

然后是4个残差的blok,会进行一系列的特征提取。

再后面是一个池化。

最后是fc。这个fc是1000分类的任务。

(3)代码

例1:不使用trick:所有的参数使用同一个学习率。

finetune_resnet18_1.py

# -*- coding: utf-8 -*-

"""

# @file name : finetune_resnet18_1.py

# @brief : 模型finetune方法,方法一:使用同一个学习率。

"""

import os

import numpy as np

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as pltimport sys

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")

sys.path.append(hello_pytorch_DIR)from tools.my_dataset import PubuDataset

from tools.common_tools import set_seed

import torchvision.models as models

import torchvision

BASEDIR = os.path.dirname(os.path.abspath(__file__))

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print("use device :{}".format(device))set_seed(1) # 设置随机种子

label_name = {"ants": 0, "bees": 1}# 参数设置

MAX_EPOCH = 25

BATCH_SIZE = 16

LR = 0.001

log_interval = 10

val_interval = 1

classes = 2

start_epoch = -1

lr_decay_step = 7# ============================ step 1/5 数据 ============================

data_dir = os.path.abspath(os.path.join(BASEDIR, "..", "data", "pubu"))

if not os.path.exists(data_dir):raise Exception("\n{} 不存在,请下载 07-02-数据-模型finetune.zip 放到\n{} 下,并解压即可".format(data_dir, os.path.dirname(data_dir)))train_dir = os.path.join(data_dir, "train")

valid_dir = os.path.join(data_dir, "val")norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]train_transform = transforms.Compose([transforms.RandomResizedCrop(224),transforms.RandomHorizontalFlip(),transforms.ToTensor(),transforms.Normalize(norm_mean, norm_std),

])valid_transform = transforms.Compose([transforms.Resize(256),transforms.CenterCrop(224),transforms.ToTensor(),transforms.Normalize(norm_mean, norm_std),

])# 构建MyDataset实例

train_data = PubuDataset(data_dir=train_dir, transform=train_transform)

valid_data = PubuDataset(data_dir=valid_dir, transform=valid_transform)# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)# ============================ step 2/5 模型 ============================# 1/3 构建模型

resnet18_ft = models.resnet18()# 2/3 加载参数

path_pretrained_model = os.path.join(BASEDIR, "..", "data", "resnet18-5c106cde.pth")

state_dict_load = torch.load(path_pretrained_model) #加载字典state_dict

resnet18_ft.load_state_dict(state_dict_load) #把state_dict放到模型中,这样就改变了原来的参数# 3/3 替换fc层

num_ftrs = resnet18_ft.fc.in_features #从原始的fc层获取输入有多少个神经元,给下面用。

resnet18_ft.fc = nn.Linear(num_ftrs, classes) #构建一个新的Linear,输出神经元个数为分类数classes,输入为多少个神经元根据上一句得到。然后用这个Linear覆盖fc层。resnet18_ft.to(device)

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数# ============================ step 4/5 优化器 ============================optimizer = optim.SGD(resnet18_ft.parameters(), lr=LR, momentum=0.9) # 选择优化器。使用相同的学习率。scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=lr_decay_step, gamma=0.1) # 设置学习率下降策略# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()for epoch in range(start_epoch + 1, MAX_EPOCH):loss_mean = 0.correct = 0.total = 0.resnet18_ft.train()for i, data in enumerate(train_loader):# forwardinputs, labels = datainputs, labels = inputs.to(device), labels.to(device)outputs = resnet18_ft(inputs)# backwardoptimizer.zero_grad()loss = criterion(outputs, labels)loss.backward()# update weightsoptimizer.step()# 统计分类情况_, predicted = torch.max(outputs.data, 1)total += labels.size(0)correct += (predicted == labels).squeeze().cpu().sum().numpy()# 打印训练信息loss_mean += loss.item()train_curve.append(loss.item())if (i+1) % log_interval == 0:loss_mean = loss_mean / log_intervalprint("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))loss_mean = 0.# if flag_m1:print("epoch:{} conv1.weights[0, 0, ...] :\n {}".format(epoch, resnet18_ft.conv1.weight[0, 0, ...]))scheduler.step() # 更新学习率# validate the modelif (epoch+1) % val_interval == 0:correct_val = 0.total_val = 0.loss_val = 0.resnet18_ft.eval()with torch.no_grad():for j, data in enumerate(valid_loader):inputs, labels = datainputs, labels = inputs.to(device), labels.to(device)outputs = resnet18_ft(inputs)loss = criterion(outputs, labels)_, predicted = torch.max(outputs.data, 1)total_val += labels.size(0)correct_val += (predicted == labels).squeeze().cpu().sum().numpy()loss_val += loss.item()loss_val_mean = loss_val/len(valid_loader)valid_curve.append(loss_val_mean)print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val_mean, correct_val / total_val))resnet18_ft.train()train_x = range(len(train_curve))

train_y = train_curvetrain_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curveplt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()其中,Dataset为:

# -*- coding: utf-8 -*-

"""

# @file name : dataset.py

# @brief : 各数据集的Dataset定义

"""

import numpy as np

import torch

import os

import random

from PIL import Image

from torch.utils.data import Datasetrandom.seed(1)class PubuDataset(Dataset):def __init__(self, data_dir, transform=None):self.label_name = {"saopin": 0, "wurenji": 1}self.data_info = self.get_img_info(data_dir) #data_info是一个list。((data/train/saopin/xxx.bmp, 0), (data/train/saopin/xxx.bmp, 0), ...)self.transform = transformdef __getitem__(self, index): #拿到样本的序号,返回图片以及标签。千万不要做过多的操作。path_img, label = self.data_info[index]img = Image.open(path_img).convert('RGB')if self.transform is not None:img = self.transform(img)return img, labeldef __len__(self):return len(self.data_info)def get_img_info(self, data_dir): #解析数据。结果:((图片1,标签1),(图片2,标签2),...)data_info = list()for root, dirs, _ in os.walk(data_dir):# 遍历类别for sub_dir in dirs: #sub_dir: saopin、wurenjiimg_names = os.listdir(os.path.join(root, sub_dir))img_names = list(filter(lambda x: x.endswith('.bmp'), img_names)) #过滤出img_names中以'.bmp'结尾的图片,保存成list# 遍历图片for i in range(len(img_names)):img_name = img_names[i]path_img = os.path.join(root, sub_dir, img_name) #得到包含完整路径的图片名label = self.label_name[sub_dir]data_info.append((path_img, int(label)))if len(data_info) == 0:raise Exception("\ndata_dir:{} is a empty dir! Please checkout your path to images!".format(data_dir))return data_info

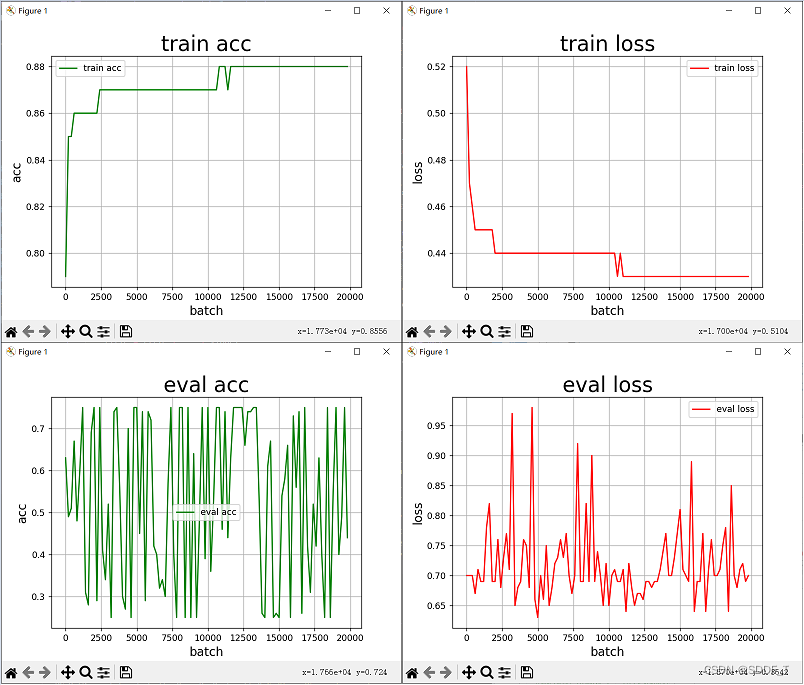

结果:第3轮的时候,分类效果已经达到97%了。说明finetune很有效果。

例2:trick1: 冻结卷积层的学习率。

# -*- coding: utf-8 -*-

"""

# @file name : finetune_resnet18_2.py

# @brief : 模型finetune方法,trick 1:冻结卷积层的学习率。

"""

import os

import numpy as np

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as pltimport sys

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")

sys.path.append(hello_pytorch_DIR)from tools.my_dataset import PubuDataset

from tools.common_tools import set_seed

import torchvision.models as models

import torchvision

BASEDIR = os.path.dirname(os.path.abspath(__file__))

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print("use device :{}".format(device))set_seed(1) # 设置随机种子

label_name = {"ants": 0, "bees": 1}# 参数设置

MAX_EPOCH = 25

BATCH_SIZE = 16

LR = 0.001

log_interval = 10

val_interval = 1

classes = 2

start_epoch = -1

lr_decay_step = 7# ============================ step 1/5 数据 ============================

data_dir = os.path.abspath(os.path.join(BASEDIR, "..", "data", "pubu"))

if not os.path.exists(data_dir):raise Exception("\n{} 不存在,请下载 07-02-数据-模型finetune.zip 放到\n{} 下,并解压即可".format(data_dir, os.path.dirname(data_dir)))train_dir = os.path.join(data_dir, "train")

valid_dir = os.path.join(data_dir, "val")norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]train_transform = transforms.Compose([transforms.RandomResizedCrop(224),transforms.RandomHorizontalFlip(),transforms.ToTensor(),transforms.Normalize(norm_mean, norm_std),

])valid_transform = transforms.Compose([transforms.Resize(256),transforms.CenterCrop(224),transforms.ToTensor(),transforms.Normalize(norm_mean, norm_std),

])# 构建MyDataset实例

train_data = PubuDataset(data_dir=train_dir, transform=train_transform)

valid_data = PubuDataset(data_dir=valid_dir, transform=valid_transform)# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)# ============================ step 2/5 模型 ============================# 1/3 构建模型

resnet18_ft = models.resnet18()# 2/3 加载参数

path_pretrained_model = os.path.join(BASEDIR, "..", "data", "resnet18-5c106cde.pth")

state_dict_load = torch.load(path_pretrained_model) #加载字典state_dict

resnet18_ft.load_state_dict(state_dict_load) #把state_dict放到模型中,这样就改变了原来的参数# 法1 : 冻结卷积层

for param in resnet18_ft.parameters():param.requires_grad = False

print("conv1.weights[0, 0, ...]:\n {}".format(resnet18_ft.conv1.weight[0, 0, ...]))# 3/3 替换fc层

num_ftrs = resnet18_ft.fc.in_features #从原始的fc层获取输入有多少个神经元,给下面用。

resnet18_ft.fc = nn.Linear(num_ftrs, classes) #构建一个新的Linear,输出神经元个数为分类数classes,输入为多少个神经元根据上一句得到。然后用这个Linear覆盖fc层。resnet18_ft.to(device)

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数# ============================ step 4/5 优化器 ============================optimizer = optim.SGD(resnet18_ft.parameters(), lr=LR, momentum=0.9) # 选择优化器scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=lr_decay_step, gamma=0.1) # 设置学习率下降策略# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()for epoch in range(start_epoch + 1, MAX_EPOCH):loss_mean = 0.correct = 0.total = 0.resnet18_ft.train()for i, data in enumerate(train_loader):# forwardinputs, labels = datainputs, labels = inputs.to(device), labels.to(device)outputs = resnet18_ft(inputs)# backwardoptimizer.zero_grad()loss = criterion(outputs, labels)loss.backward()# update weightsoptimizer.step()# 统计分类情况_, predicted = torch.max(outputs.data, 1)total += labels.size(0)correct += (predicted == labels).squeeze().cpu().sum().numpy()# 打印训练信息loss_mean += loss.item()train_curve.append(loss.item())if (i+1) % log_interval == 0:loss_mean = loss_mean / log_intervalprint("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))loss_mean = 0.# if flag_m1:print("epoch:{} conv1.weights[0, 0, ...] :\n {}".format(epoch, resnet18_ft.conv1.weight[0, 0, ...]))scheduler.step() # 更新学习率# validate the modelif (epoch+1) % val_interval == 0:correct_val = 0.total_val = 0.loss_val = 0.resnet18_ft.eval()with torch.no_grad():for j, data in enumerate(valid_loader):inputs, labels = datainputs, labels = inputs.to(device), labels.to(device)outputs = resnet18_ft(inputs)loss = criterion(outputs, labels)_, predicted = torch.max(outputs.data, 1)total_val += labels.size(0)correct_val += (predicted == labels).squeeze().cpu().sum().numpy()loss_val += loss.item()loss_val_mean = loss_val/len(valid_loader)valid_curve.append(loss_val_mean)print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val_mean, correct_val / total_val))resnet18_ft.train()train_x = range(len(train_curve))

train_y = train_curvetrain_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curveplt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()结果:

发现果然参数不再更新了。

例3:trick2:不同参数不同学习率

# -*- coding: utf-8 -*-

"""

# @file name : finetune_resnet18.py

# @brief : 模型finetune方法:不同参数不同的学习率

"""

import os

import numpy as np

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as pltimport sys

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")

sys.path.append(hello_pytorch_DIR)from tools.my_dataset import PubuDataset

from tools.common_tools import set_seed

import torchvision.models as models

import torchvision

BASEDIR = os.path.dirname(os.path.abspath(__file__))

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print("use device :{}".format(device))set_seed(1) # 设置随机种子

label_name = {"ants": 0, "bees": 1}# 参数设置

MAX_EPOCH = 25

BATCH_SIZE = 16

LR = 0.001

log_interval = 10

val_interval = 1

classes = 2

start_epoch = -1

lr_decay_step = 7# ============================ step 1/5 数据 ============================

data_dir = os.path.abspath(os.path.join(BASEDIR, "..", "data", "pubu"))

if not os.path.exists(data_dir):raise Exception("\n{} 不存在,请下载 07-02-数据-模型finetune.zip 放到\n{} 下,并解压即可".format(data_dir, os.path.dirname(data_dir)))train_dir = os.path.join(data_dir, "train")

valid_dir = os.path.join(data_dir, "val")norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]train_transform = transforms.Compose([transforms.RandomResizedCrop(224),transforms.RandomHorizontalFlip(),transforms.ToTensor(),transforms.Normalize(norm_mean, norm_std),

])valid_transform = transforms.Compose([transforms.Resize(256),transforms.CenterCrop(224),transforms.ToTensor(),transforms.Normalize(norm_mean, norm_std),

])# 构建MyDataset实例

train_data = PubuDataset(data_dir=train_dir, transform=train_transform)

valid_data = PubuDataset(data_dir=valid_dir, transform=valid_transform)# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)# ============================ step 2/5 模型 ============================# 1/3 构建模型

resnet18_ft = models.resnet18()# 2/3 加载参数

path_pretrained_model = os.path.join(BASEDIR, "..", "data", "resnet18-5c106cde.pth")

state_dict_load = torch.load(path_pretrained_model) #加载字典state_dict

resnet18_ft.load_state_dict(state_dict_load) #把state_dict放到模型中,这样就改变了原来的参数# 3/3 替换fc层

num_ftrs = resnet18_ft.fc.in_features #从原始的fc层获取输入有多少个神经元,给下面用。

resnet18_ft.fc = nn.Linear(num_ftrs, classes) #构建一个新的Linear,输出神经元个数为分类数classes,输入为多少个神经元根据上一句得到。然后用这个Linear覆盖fc层。resnet18_ft.to(device)

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数# ============================ step 4/5 优化器 ============================

# 法2 : conv 小学习率

fc_params_id = list(map(id, resnet18_ft.fc.parameters())) # 返回的是parameters的 内存地址。对fc层获取地址,形成一个list.

base_params = filter(lambda p: id(p) not in fc_params_id, resnet18_ft.parameters()) #过滤掉fc层。也就是前面卷积层的参数。

optimizer = optim.SGD([{'params': base_params, 'lr': LR*0.1}, # 前面卷积层的参数。设置卷积层的学习率,为LR*0.1,比后面的小十倍。如果设为0,表示冻结卷积层。{'params': resnet18_ft.fc.parameters(), 'lr': LR}], momentum=0.9) #fc层的学习率。scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=lr_decay_step, gamma=0.1) # 设置学习率下降策略# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()for epoch in range(start_epoch + 1, MAX_EPOCH):loss_mean = 0.correct = 0.total = 0.resnet18_ft.train()for i, data in enumerate(train_loader):# forwardinputs, labels = datainputs, labels = inputs.to(device), labels.to(device)outputs = resnet18_ft(inputs)# backwardoptimizer.zero_grad()loss = criterion(outputs, labels)loss.backward()# update weightsoptimizer.step()# 统计分类情况_, predicted = torch.max(outputs.data, 1)total += labels.size(0)correct += (predicted == labels).squeeze().cpu().sum().numpy()# 打印训练信息loss_mean += loss.item()train_curve.append(loss.item())if (i+1) % log_interval == 0:loss_mean = loss_mean / log_intervalprint("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))loss_mean = 0.scheduler.step() # 更新学习率# validate the modelif (epoch+1) % val_interval == 0:correct_val = 0.total_val = 0.loss_val = 0.resnet18_ft.eval()with torch.no_grad():for j, data in enumerate(valid_loader):inputs, labels = datainputs, labels = inputs.to(device), labels.to(device)outputs = resnet18_ft(inputs)loss = criterion(outputs, labels)_, predicted = torch.max(outputs.data, 1)total_val += labels.size(0)correct_val += (predicted == labels).squeeze().cpu().sum().numpy()loss_val += loss.item()loss_val_mean = loss_val/len(valid_loader)valid_curve.append(loss_val_mean)print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val_mean, correct_val / total_val))resnet18_ft.train()train_x = range(len(train_curve))

train_y = train_curvetrain_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curveplt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()