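Data processing module

Data overview

A quick introduction to the corpus.

The data folder contains the following files:

cn.txt: the Chinese corpus; every sentence has already been word-segmented.

en.txt: the aligned English corpus, also already tokenized.

cn.test.txt: the Chinese test-set corpus

en.test.txt: the aligned English test-set corpus

cn.txt.vab: the Chinese vocabulary file

en.txt.vab: the English vocabulary file

The corpus contains only a little over 6,000 aligned sentence pairs. For that reason, no word-frequency filtering was applied when building the vocabularies; otherwise the data fed to the model would contain many <unk> tokens and model performance would be quite poor.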
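To make the trade-off concrete, here is a minimal sketch of vocabulary building with an optional frequency threshold. The helper names `build_vocab` and `encode`, the special tokens, and the convention that id 0 means `<UNK>` are assumptions made for illustration; they are not taken from the original preprocessing script.

```python
from collections import Counter


def build_vocab(sentences, min_count=1, specials=("<UNK>", "<START>", "<END>")):
    """Return an id -> word dict with string ids, as a JSON file would store them."""
    counts = Counter(word for sentence in sentences for word in sentence.split())
    # Keep only words that occur at least min_count times (min_count=1 keeps everything).
    kept = sorted(w for w, c in counts.items() if c >= min_count and w not in specials)
    words = list(specials) + kept
    return {str(i): w for i, w in enumerate(words)}


def encode(sentence, word2id):
    # Words missing from the vocabulary fall back to id 0, reserved here for <UNK>.
    return [word2id.get(w, 0) for w in sentence.split()]


corpus = ["金融 市场 发展", "金融 企业 发展", "社会 资金"]
for min_count in (1, 2):
    id2word = build_vocab(corpus, min_count=min_count)
    word2id = {w: int(i) for i, w in id2word.items()}
    ids = encode(corpus[0], word2id)
    print(min_count, len(id2word), ids.count(0) / len(ids))
```

With a tiny corpus, even `min_count=2` already turns a third of the first sentence into `<UNK>`, which is the effect the paragraph above wants to avoid.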

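Processing logic

The main job is to split the data into a training set and a validation set, and then to implement the next_train_batch and next_test_batch functions.

When this class is used for testing, just set seperate_rate to 1: all of the data then goes into the validation set and is used for testing.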
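The split rule can be sketched on its own as follows. The function name `split_pairs` and the `seed` argument are hypothetical; the original class draws from the global `random` module and keeps the spelling `seperate_rate`, which is reused here.

```python
import random


def split_pairs(pairs, seperate_rate, seed=None):
    """Randomly assign each (source, target) pair to the training or held-out set."""
    rng = random.Random(seed)
    train, held_out = [], []
    for pair in pairs:
        # rng.uniform(0, 1) is built on random(), which returns values in [0, 1),
        # so seperate_rate=1 sends every pair to the held-out set.
        if rng.uniform(0, 1) < seperate_rate:
            held_out.append(pair)
        else:
            train.append(pair)
    return train, held_out


pairs = [("src %d" % i, "dst %d" % i) for i in range(100)]
train, held_out = split_pairs(pairs, seperate_rate=1)
print(len(train), len(held_out))
```

Because the assignment is random per sentence, the held-out share only approximates `seperate_rate`, and it changes from run to run unless a seed is fixed.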

__author__ = 'jmh081701'

import json
import copy
import random

import numpy as np


class DATAPROCESS:
    def __init__(self, source_ling_path, dest_ling_path, source_word_embedings_path, source_vocb_path,
                 dest_word_embeddings_path, dest_vocb_path, seperate_rate=0.05, batch_size=100):
        self.src_data_path = source_ling_path  # source-language corpus
        self.dst_data_path = dest_ling_path  # target-language corpus (the reference translations)
        self.src_word_embedding_path = source_word_embedings_path  # pretrained Chinese word vectors
        self.src_vocb_path = source_vocb_path  # pretrained Chinese vocabulary
        self.dst_word_embedding_path = dest_word_embeddings_path  # pretrained English word vectors
        self.dst_vocb_path = dest_vocb_path  # pretrained English vocabulary
        self.seperate_rate = seperate_rate  # fraction of the samples assigned to the test set
        self.batch_size = batch_size
        self.src_sentence_length = 23  # every sentence is truncated or padded to this length
        self.dst_sentence_length = 30

        # data structures to build
        self.src_data_raw = []  # the full data set
        self.dst_data_raw = []
        self.src_train_raw = []  # training set
        self.dst_train_raw = []
        self.src_test_raw = []  # test set
        self.dst_test_raw = []

        self.src_word_embeddings = None  # Chinese word vectors and vocabulary
        self.src_id2word = None
        self.src_word2id = None
        self.src_embedding_length = 0
        self.dst_word_embeddings = None  # English word vectors and vocabulary
        self.dst_id2word = None
        self.dst_word2id = None
        self.dst_embedding_length = 0

        self.__load_wordebedding()
        self.__load_data()

        self.last_batch = 0
        self.epoch = 0
        self.dst_vocb_size = len(self.dst_word2id)

    def __load_wordebedding(self):
        self.src_word_embeddings = np.load(self.src_word_embedding_path)
        # store the width per language instead of overwriting one shared attribute
        self.src_embedding_length = np.shape(self.src_word_embeddings)[-1]
        with open(self.src_vocb_path, encoding="utf8") as fp:
            self.src_id2word = json.load(fp)
        self.src_word2id = {}
        for each in self.src_id2word:
            self.src_word2id.setdefault(self.src_id2word[each], each)

        self.dst_word_embeddings = np.load(self.dst_word_embedding_path)
        self.dst_embedding_length = np.shape(self.dst_word_embeddings)[-1]
        with open(self.dst_vocb_path, encoding="utf8") as fp:
            self.dst_id2word = json.load(fp)
        self.dst_word2id = {}
        for each in self.dst_id2word:
            self.dst_word2id.setdefault(self.dst_id2word[each], each)

    def __load_data(self):
        with open(self.src_data_path, encoding='utf8') as fp:
            train_data_rawlines = fp.readlines()
        with open(self.dst_data_path, encoding='utf8') as fp:
            train_label_rawlines = fp.readlines()
        total_lines = len(train_data_rawlines)
        assert len(train_data_rawlines) == len(train_label_rawlines)
        src_len = []
        dst_len = []
        for index in range(total_lines):
            data_line = train_data_rawlines[index].split(" ")[:-1]
            label_line = train_label_rawlines[index].split(" ")[:-1]
            # Wrap every target sentence in start-of-translation and end-of-translation markers.
            # This is indispensable! Source sentences have no such requirement; adding them is optional.
            label_line = ["<START>"] + label_line + ["<END>"]
            # map words to ids (unknown words get id 0), then assign the pair to the validation or training set
            data = [int(self.src_word2id.get(each, 0)) for each in data_line]
            label = [int(self.dst_word2id.get(each, 0)) for each in label_line]
            src_len.append(len(data))
            dst_len.append(len(label))
            self.src_data_raw.append(data)
            self.dst_data_raw.append(label)
            if random.uniform(0, 1) < self.seperate_rate:
                self.src_test_raw.append(data)
                self.dst_test_raw.append(label)
            else:
                self.src_train_raw.append(data)
                self.dst_train_raw.append(label)
        self.src_len_std = np.std(src_len)
        self.src_len_mean = np.mean(src_len)
        self.src_len_max = np.max(src_len)
        self.src_len_min = np.min(src_len)
        self.dst_len_std = np.std(dst_len)
        self.dst_len_mean = np.mean(dst_len)
        self.dst_len_max = np.max(dst_len)
        self.dst_len_min = np.min(dst_len)
        self.train_batches = [i for i in range(int(len(self.src_train_raw) / self.batch_size) - 1)]
        self.train_batch_index = 0
        self.test_batches = [i for i in range(int(len(self.src_test_raw) / self.batch_size) - 1)]
        self.test_batch_index = 0

    def pad_sequence(self, sequence, object_length, pad_value=None):
        '''
        :param sequence: the sequence to pad
        :param object_length: the target length
        :param pad_value: id used for padding; if None, the sequence is repeated cyclically instead
        :return: a copy of the sequence with exactly object_length items
        '''
        sequence = copy.deepcopy(sequence)
        if pad_value is None:
            sequence = sequence * (1 + int((0.5 + object_length) / (len(sequence))))
            sequence = sequence[:object_length]
        else:
            sequence = sequence + [pad_value] * (object_length - len(sequence))
            sequence = sequence[:object_length]  # truncate over-long sentences as well
        return sequence

    def next_train_batch(self):
        # padding
        output_x = []
        output_label = []
        src_sequence_length = []
        dst_sequence_length = []
        index = self.train_batches[self.train_batch_index]
        self.train_batch_index = (self.train_batch_index + 1) % len(self.train_batches)
        if self.train_batch_index == 0:  # compare by value; "is 0" relies on int caching
            self.epoch += 1
        datas = self.src_train_raw[index * self.batch_size:(index + 1) * self.batch_size]
        labels = self.dst_train_raw[index * self.batch_size:(index + 1) * self.batch_size]
        for index in range(self.batch_size):
            # pad with <END>
            data = self.pad_sequence(datas[index], self.src_sentence_length, pad_value=int(self.src_word2id['<END>']))  # source language
            label = self.pad_sequence(labels[index], self.dst_sentence_length, pad_value=int(self.dst_word2id['<END>']))  # target language
            label[-1] = int(self.dst_word2id['<END>'])  # make sure the target sentence always ends with END
            output_x.append(data)
            output_label.append(label)
            src_sequence_length.append(min(self.src_sentence_length, len(datas[index])))
            # label is already padded here, so this always equals dst_sentence_length
            dst_sequence_length.append(min(self.dst_sentence_length, len(label)))
        # everything returned is word ids; src(dst)_sequence_length hold the valid lengths
        return output_x, output_label, src_sequence_length, dst_sequence_length

    def next_test_batch(self):
        output_x = []
        output_label = []
        src_sequence_length = []
        dst_sequence_length = []
        index = self.test_batches[self.test_batch_index]
        self.test_batch_index = (self.test_batch_index + 1) % len(self.test_batches)
        datas = self.src_test_raw[index * self.batch_size:(index + 1) * self.batch_size]
        labels = self.dst_test_raw[index * self.batch_size:(index + 1) * self.batch_size]
        for index in range(len(datas)):
            # pad with <END>
            data = self.pad_sequence(datas[index], self.src_sentence_length, pad_value=int(self.src_word2id['<END>']))
            label = self.pad_sequence(labels[index], self.dst_sentence_length, pad_value=int(self.dst_word2id['<END>']))
            output_x.append(data)
            output_label.append(label)
            src_sequence_length.append(min(self.src_sentence_length, len(datas[index])))
            dst_sequence_length.append(min(self.dst_sentence_length, len(labels[index])))
        return output_x, output_label, src_sequence_length, dst_sequence_length

    def test_data(self):
        output_x = []
        output_label = []
        src_sequence_length = []
        dst_sequence_length = []
        datas = self.src_test_raw[0:]
        labels = self.dst_test_raw[0:]
        for index in range(len(datas)):
            # pad with <END>
            data = self.pad_sequence(datas[index], self.src_sentence_length, pad_value=int(self.src_word2id['<END>']))
            label = self.pad_sequence(labels[index], self.dst_sentence_length, pad_value=int(self.dst_word2id['<END>']))
            output_x.append(data)
            output_label.append(label)
            src_sequence_length.append(min(self.src_sentence_length, len(datas[index])))
            dst_sequence_length.append(min(self.dst_sentence_length, len(labels[index])))
        start = 0
        end = len(datas)
        while len(output_x) < self.batch_size:
            # fewer samples than one batch: repeat samples from the start until the batch is full
            output_x.append(output_x[start])
            output_label.append(output_label[start])
            src_sequence_length.append(src_sequence_length[start])
            dst_sequence_length.append(dst_sequence_length[start])
            start = (start + 1) % end
        return output_x, output_label, src_sequence_length, dst_sequence_length

    def src_id2words(self, ids):
        rst = []
        for id in ids:
            rst.append(self.src_id2word[str(id)])
        return " ".join(rst)

    def tgt_id2words(self, ids):
        rst = []
        for id in ids:
            rst.append(self.dst_id2word[str(id)])
        return " ".join(rst)
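The two padding modes of pad_sequence are easy to mix up, so here is a standalone sketch of the same logic written as a plain function. The id values and `END_ID` are made up for illustration, and the explicit truncation in the constant-padding branch is an addition of this sketch.

```python
def pad_sequence(sequence, object_length, pad_value=None):
    """Bring a list of ids to exactly object_length items."""
    sequence = list(sequence)
    if pad_value is None:
        # Cyclic mode: repeat the sentence until it is long enough, then cut.
        repeats = 1 + int((0.5 + object_length) / len(sequence))
        return (sequence * repeats)[:object_length]
    # Constant mode: append pad_value, then cut in case the input was too long.
    padded = sequence + [pad_value] * (object_length - len(sequence))
    return padded[:object_length]


END_ID = 2  # hypothetical id of <END>
ids = [11, 12, 13]
cyclic = pad_sequence(ids, 7)
constant = pad_sequence(ids, 7, pad_value=END_ID)
valid_length = min(7, len(ids))  # what the batch functions report as sequence length
print(cyclic)        # [11, 12, 13, 11, 12, 13, 11]
print(constant)      # [11, 12, 13, 2, 2, 2, 2]
print(valid_length)  # 3
```

The batch functions in the class always pass `<END>` as `pad_value`, so only the constant mode is exercised there.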

Next, gather some basic statistics on the corpus. The most important thing is to know the maximum and minimum sentence lengths.

if __name__ == '__main__':
    dataGen = DATAPROCESS(source_ling_path="data/cn.txt",
                          dest_ling_path="data/en.txt",
                          source_word_embedings_path="data/cn.txt.ebd.npy",
                          source_vocb_path="data/cn.txt.vab",
                          dest_word_embeddings_path="data/en.txt.ebd.npy",
                          dest_vocb_path="data/en.txt.vab",
                          batch_size=5,
                          seperate_rate=0.1)
    print("-" * 10 + "src corpus" + '-' * 20)
    print({'std': dataGen.src_len_std, 'mean': dataGen.src_len_mean, 'max': dataGen.src_len_max, 'min': dataGen.src_len_min})
    print('-' * 10 + "dst corpus" + '-' * 20)
    print({'std': dataGen.dst_len_std, 'mean': dataGen.dst_len_mean, 'max': dataGen.dst_len_max, 'min': dataGen.dst_len_min})

Output:

----------src corpus--------------------
{'std': 1.1084102394696949, 'mean': 21.437810945273633, 'max': 23, 'min': 20}
----------dst corpus--------------------
{'std': 1.1049706194897553, 'mean': 28.491805677494877, 'max': 30, 'min': 27}

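So the longest Chinese sentence has 23 tokens, and the longest target-language (English) sentence has 30, counting the added <START> and <END> markers.

First of all, sentences this short are good news; otherwise the RNN would fall over very easily. At first I skipped this statistics step and simply picked numbers off the top of my head:

self.src_sentence_length = 100  # every sentence is truncated or padded to this length
self.dst_sentence_length = 150

and the results were very poor.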
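A quick back-of-the-envelope calculation shows how much of each batch is padding under the two choices, using the mean lengths printed above. That heavy padding contributed to the poor results is an assumption of this sketch, not something the original measured; `padding_share` is a hypothetical helper.

```python
def padding_share(mean_length, fixed_length):
    """Average fraction of positions per sentence that hold padding."""
    return 1.0 - mean_length / fixed_length


src_mean, dst_mean = 21.437810945273633, 28.491805677494877
for name, src_len, dst_len in (("fitted", 23, 30), ("guessed", 100, 150)):
    print(name,
          round(padding_share(src_mean, src_len), 3),
          round(padding_share(dst_mean, dst_len), 3))
```

With lengths 23 and 30, roughly 7% and 5% of the positions are padding; with 100 and 150, about 79% and 81% are.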

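Translation model

In essence this is an encoder-decoder model, except that before the decoder's BasicDecoder is built, an attention mechanism is wrapped around the original RNN cell. The attention mechanism is the one that ships with TensorFlow.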

#coding:utf8
# Written against TensorFlow 1.x (tf.placeholder, tf.contrib).

__author__ = 'jmh081701'

from utils import DATAPROCESS
import tensorflow as tf

batch_size = 300
rnn_size = 200
rnn_num_layers = 1
encoder_embedding_size = 100
decoder_embedding_size = 100

# Learning Rate
lr = 0.001
display_step = 10

dataGen = DATAPROCESS(source_ling_path="data/cn.txt",
                      dest_ling_path="data/en.txt",
                      source_word_embedings_path="data/cn.txt.ebd.npy",
                      source_vocb_path="data/cn.txt.vab",
                      dest_word_embeddings_path="data/en.txt.ebd.npy",
                      dest_vocb_path="data/en.txt.vab",
                      batch_size=batch_size,
                      seperate_rate=0.1)


def model_inputs():
    inputs = tf.placeholder(tf.int32, [None, None], name="inputs")
    targets = tf.placeholder(tf.int32, [None, None], name="targets")
    learning_rate = tf.placeholder(tf.float32, name="learning_rate")
    source_sequence_len = tf.placeholder(tf.int32, (None,), name="source_sequence_len")
    target_sequence_len = tf.placeholder(tf.int32, (None,), name="target_sequence_len")
    max_target_sequence_len = tf.placeholder(tf.int32, (None,), name="max_target_sequence_len")
    return inputs, targets, learning_rate, source_sequence_len, target_sequence_len, max_target_sequence_len


def encoder_layer(rnn_inputs, rnn_size, rnn_num_layers,
                  source_sequence_len, source_vocab_size, encoder_embedding_size=100):
    """
    Build the encoder.
    @param rnn_inputs: input to the RNN
    @param rnn_size: number of hidden units in the RNN
    @param rnn_num_layers: number of stacked RNN layers
    @param source_sequence_len: lengths of the Chinese sentences
    @param source_vocab_size: size of the Chinese vocabulary
    @param encoder_embedding_size: dimension of the word embeddings in the encoder
    """
    # embed the input words
    encoder_embed = tf.contrib.layers.embed_sequence(rnn_inputs, source_vocab_size, encoder_embedding_size)

    # LSTM cell
    def get_lstm(rnn_size):
        lstm = tf.contrib.rnn.LSTMCell(rnn_size, initializer=tf.random_uniform_initializer(-0.1, 0.1, seed=123))
        return lstm

    # stack rnn_num_layers LSTM layers
    lstms = tf.contrib.rnn.MultiRNNCell([get_lstm(rnn_size) for _ in range(rnn_num_layers)])
    encoder_outputs, encoder_states = tf.nn.dynamic_rnn(lstms, encoder_embed, source_sequence_len,
                                                        dtype=tf.float32)
    return encoder_outputs, encoder_states


def decoder_layer_inputs(target_data, target_vocab_to_int, batch_size):
    """
    Prepare the decoder input.
    @param target_data: tensor of target-language data
    @param target_vocab_to_int: target-language word-to-index mapping (dict)
    @param batch_size: batch size
    """
    # drop the last token of every sentence in the batch
    ending = tf.strided_slice(target_data, [0, 0], [batch_size, -1], [1, 1])
    # prepend "<START>" to every sentence in the batch
    decoder_inputs = tf.concat([tf.fill([batch_size, 1], int(target_vocab_to_int["<START>"])),
                                ending], 1)
    return decoder_inputs


def decoder_layer_train(encoder_states, decoder_cell, decoder_embed,
                        target_sequence_len, max_target_sequence_len, output_layer,
                        encoder_outputs, source_sequence_len):
    """
    Decoder for training.
    @param encoder_states: the context vector produced by the encoder
    @param decoder_cell: the decoder cell
    @param decoder_embed: the embedded decoder input
    @param target_sequence_len: lengths of the English sentences
    @param max_target_sequence_len: maximum length of the English sentences
    @param output_layer: the output layer
    @param encoder_outputs: encoder outputs at every time step (the attention memory)
    @param source_sequence_len: lengths of the source sentences
    """
    # build the helper object
    training_helper = tf.contrib.seq2seq.TrainingHelper(inputs=decoder_embed,
                                                        sequence_length=target_sequence_len,
                                                        time_major=False)
    # Add the attention mechanism.
    # First define a Bahdanau attention mechanism. It scores with a small neural network;
    # num_units sets the size of that network.
    attention_mechanism = tf.contrib.seq2seq.BahdanauAttention(num_units=rnn_size,
                                                               memory=encoder_outputs,
                                                               memory_sequence_length=source_sequence_len)
    # wrap the original RNN cell in an AttentionWrapper
    decoder_cell = tf.contrib.seq2seq.AttentionWrapper(decoder_cell, attention_mechanism,
                                                       attention_layer_size=rnn_size)
    # the initial state is set to the encoder's final state
    training_decoder = tf.contrib.seq2seq.BasicDecoder(
        decoder_cell,
        training_helper,
        decoder_cell.zero_state(batch_size, dtype=tf.float32).clone(cell_state=encoder_states),
        output_layer)
    training_decoder_outputs, _, _ = tf.contrib.seq2seq.dynamic_decode(training_decoder,
                                                                       impute_finished=True,
                                                                       maximum_iterations=max_target_sequence_len)
    return training_decoder_outputs


def decoder_layer_infer(encoder_states, decoder_cell, decoder_embed, start_id, end_id,
                        max_target_sequence_len, output_layer, batch_size,
                        encoder_outputs, source_sequence_len):
    """
    Decoder for prediction/inference.
    @param encoder_states: the context vector produced by the encoder
    @param decoder_cell: the decoder cell
    @param decoder_embed: the decoder embedding matrix
    @param start_id: token id that starts a sentence, i.e. the id of "<START>"
    @param end_id: token id that ends a sentence, i.e. the id of "<END>"
    @param max_target_sequence_len: maximum length of the English sentences
    @param output_layer: the output layer
    @param batch_size: batch size
    @param encoder_outputs: encoder outputs at every time step (the attention memory)
    @param source_sequence_len: lengths of the source sentences
    """
    start_tokens = tf.tile(tf.constant([start_id], dtype=tf.int32), [batch_size], name="start_tokens")
    inference_helper = tf.contrib.seq2seq.GreedyEmbeddingHelper(decoder_embed,
                                                                start_tokens,
                                                                end_id)
    attention_mechanism = tf.contrib.seq2seq.BahdanauAttention(num_units=rnn_size,
                                                               memory=encoder_outputs,
                                                               memory_sequence_length=source_sequence_len)
    # add attention in the same way as in training
    decoder_cell = tf.contrib.seq2seq.AttentionWrapper(decoder_cell, attention_mechanism,
                                                       attention_layer_size=rnn_size)
    inference_decoder = tf.contrib.seq2seq.BasicDecoder(
        decoder_cell,
        inference_helper,
        decoder_cell.zero_state(batch_size, dtype=tf.float32).clone(cell_state=encoder_states),
        output_layer)
    inference_decoder_outputs, _, _ = tf.contrib.seq2seq.dynamic_decode(inference_decoder,
                                                                        impute_finished=True,
                                                                        maximum_iterations=max_target_sequence_len)
    return inference_decoder_outputs


def decoder_layer(encoder_states, decoder_inputs, target_sequence_len,
                  max_target_sequence_len, rnn_size, rnn_num_layers,
                  target_vocab_to_int, target_vocab_size, decoder_embedding_size, batch_size,
                  encoder_outputs, source_sequence_length):
    decoder_embeddings = tf.Variable(tf.random_uniform([target_vocab_size, decoder_embedding_size]))
    decoder_embed = tf.nn.embedding_lookup(decoder_embeddings, decoder_inputs)

    def get_lstm(rnn_size):
        lstm = tf.contrib.rnn.LSTMCell(rnn_size, initializer=tf.random_uniform_initializer(-0.1, 0.1, seed=456))
        return lstm

    decoder_cell = tf.contrib.rnn.MultiRNNCell([get_lstm(rnn_size) for _ in range(rnn_num_layers)])

    # output_layer logits
    output_layer = tf.layers.Dense(target_vocab_size)

    with tf.variable_scope("decoder"):
        training_logits = decoder_layer_train(encoder_states,
                                              decoder_cell,
                                              decoder_embed,
                                              target_sequence_len,
                                              max_target_sequence_len,
                                              output_layer,
                                              encoder_outputs,
                                              source_sequence_length)

    with tf.variable_scope("decoder", reuse=True):
        inference_logits = decoder_layer_infer(encoder_states,
                                               decoder_cell,
                                               decoder_embeddings,
                                               int(target_vocab_to_int["<START>"]),
                                               int(target_vocab_to_int["<END>"]),
                                               max_target_sequence_len,
                                               output_layer,
                                               batch_size,
                                               encoder_outputs,
                                               source_sequence_length)

    return training_logits, inference_logits


def seq2seq_model(input_data, target_data, batch_size,
                  source_sequence_len, target_sequence_len, max_target_sentence_len,
                  source_vocab_size, target_vocab_size,
                  encoder_embedding_size, decoder_embeding_size,
                  rnn_size, rnn_num_layers, target_vocab_to_int):
    encoder_outputs, encoder_states = encoder_layer(input_data, rnn_size, rnn_num_layers, source_sequence_len,
                                                    source_vocab_size, encoder_embedding_size)
    decoder_inputs = decoder_layer_inputs(target_data, target_vocab_to_int, batch_size)
    training_decoder_outputs, inference_decoder_outputs = decoder_layer(encoder_states,
                                                                        decoder_inputs,
                                                                        target_sequence_len,
                                                                        max_target_sentence_len,
                                                                        rnn_size,
                                                                        rnn_num_layers,
                                                                        target_vocab_to_int,
                                                                        target_vocab_size,
                                                                        decoder_embeding_size,
                                                                        batch_size,
                                                                        encoder_outputs,
                                                                        source_sequence_len)
    return training_decoder_outputs, inference_decoder_outputs


train_graph = tf.Graph()
with train_graph.as_default():
    inputs, targets, learning_rate, source_sequence_len, target_sequence_len, _ = model_inputs()
    max_target_sequence_len = 30
    train_logits, inference_logits = seq2seq_model(tf.reverse(inputs, [-1]),
                                                   targets,
                                                   batch_size,
                                                   source_sequence_len,
                                                   target_sequence_len,
                                                   max_target_sequence_len,
                                                   len(dataGen.src_word2id),
                                                   len(dataGen.dst_word2id),
                                                   encoder_embedding_size,
                                                   decoder_embedding_size,
                                                   rnn_size,
                                                   rnn_num_layers,
                                                   dataGen.dst_word2id)
    training_logits = tf.identity(train_logits.rnn_output, name="logits")
    inference_logits = tf.identity(inference_logits.sample_id, name="predictions")
    masks = tf.sequence_mask(target_sequence_len, max_target_sequence_len, dtype=tf.float32, name="masks")

    with tf.name_scope("optimization"):
        cost = tf.contrib.seq2seq.sequence_loss(training_logits, targets, masks)
        optimizer = tf.train.AdamOptimizer(learning_rate)
        gradients = optimizer.compute_gradients(cost)
        clipped_gradients = [(tf.clip_by_value(grad, -1., 1.), var) for grad, var in gradients if grad is not None]
        train_op = optimizer.apply_gradients(clipped_gradients)

max_epoch = 500

with tf.Session(graph=train_graph) as sess:
    sess.run(tf.global_variables_initializer())
    try:
        # resume from the latest checkpoint if there is one
        loader = tf.train.Saver()
        loader.restore(sess, tf.train.latest_checkpoint('./checkpoints'))
    except Exception as exp:
        print("retrain model")
    saver = tf.train.Saver()
    dataGen.epoch = 1
    while dataGen.epoch < max_epoch:
        output_x, output_label, src_sequence_length, dst_sequence_length = dataGen.next_train_batch()
        _, loss = sess.run([train_op, cost],
                           {inputs: output_x,
                            targets: output_label,
                            learning_rate: lr,
                            source_sequence_len: src_sequence_length,
                            target_sequence_len: dst_sequence_length})
        if dataGen.train_batch_index % display_step == 0 and dataGen.train_batch_index > 0:
            output_x, output_label, src_sequence_length, dst_sequence_length = dataGen.next_test_batch()
            batch_train_logits = sess.run(inference_logits,
                                          {inputs: output_x,
                                           source_sequence_len: src_sequence_length,
                                           target_sequence_len: dst_sequence_length})
            # note: `loss` is the loss of the training batch above; no loss is computed on the validation batch
            print('Epoch {:>3} - Valid Loss: {:>6.4f}'.format(dataGen.epoch, loss))
        if dataGen.epoch % 30 == 0:
            # save every 30 epochs
            saver.save(sess, "checkpoints/dev")
    # Save Model
    saver.save(sess, "checkpoints/dev")
    print('Model Trained and Saved')
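As a side note on decoder_layer_inputs in the script above, the shift it performs (drop the last token, prepend `<START>`) can be sketched in plain Python. The ids below are hypothetical and the helper name `decoder_inputs` is made up for illustration.

```python
def decoder_inputs(target_batch, start_id):
    """Teacher-forcing input: each target sentence shifted right by one position."""
    return [[start_id] + sentence[:-1] for sentence in target_batch]


START_ID, END_ID = 1, 2  # hypothetical ids of <START> and <END>
targets = [
    [START_ID, 7, 8, 9, END_ID],
    [START_ID, 5, 6, END_ID, END_ID],  # second sentence padded with <END>
]
shifted = decoder_inputs(targets, START_ID)
for row in shifted:
    print(row)
```

Because the data loader already puts `<START>` at the head of every target sentence, the shifted input begins with two `<START>` ids and the first token the model is trained to predict is `<START>` itself. That may be why the predictions shown later in this post all begin with `<START>`.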

The main thing to look at is how decoder_layer_train brings the attention mechanism in.

The core is these three statements:

attention_mechanism = tf.contrib.seq2seq.BahdanauAttention(num_units=rnn_size,
                                                           memory=encoder_outputs,
                                                           memory_sequence_length=source_sequence_len)
# wrap the original RNN cell in an AttentionWrapper
decoder_cell = tf.contrib.seq2seq.AttentionWrapper(decoder_cell, attention_mechanism,
                                                   attention_layer_size=rnn_size)
# the initial state is set to the encoder's final state
training_decoder = tf.contrib.seq2seq.BasicDecoder(
    decoder_cell,
    training_helper,
    decoder_cell.zero_state(batch_size, dtype=tf.float32).clone(cell_state=encoder_states),
    output_layer)

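Here, the attention_mechanism statement specifies which kind of attention to use.

decoder_cell = AttentionWrapper(...) combines the RNN cell with the attention mechanism.

training_decoder = BasicDecoder(...) builds a decoder.

Note that decoder_cell.zero_state(batch_size,dtype=tf.float32).clone(cell_state=encoder_states) uses the encoder's final state as the decoder's initial state.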
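To show what the attention mechanism computes at one decoder step, here is a minimal pure-Python sketch of additive (Bahdanau-style) scoring. It is not TensorFlow's implementation: the weight matrices are random stand-ins for learned parameters, masking by `memory_sequence_length` is left out, and all names are chosen for this example.

```python
import math
import random


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(query, memory, w_query, w_memory, v):
    """Score each encoder output h_i with v . tanh(W_q q + W_m h_i)."""
    projected_query = matvec(w_query, query)
    scores = []
    for h in memory:
        projected_h = matvec(w_memory, h)
        hidden = [math.tanh(a + b) for a, b in zip(projected_query, projected_h)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    weights = softmax(scores)  # one weight per source position
    dim = len(memory[0])
    # The context vector is the attention-weighted sum of the encoder outputs.
    context = [sum(wt * h[d] for wt, h in zip(weights, memory)) for d in range(dim)]
    return weights, context


rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


num_units, state_size, source_len = 4, 3, 5
memory = rand_matrix(source_len, state_size)             # stands in for encoder_outputs
query = [rng.uniform(-1, 1) for _ in range(state_size)]  # stands in for the decoder state
weights, context = additive_attention(query, memory,
                                      rand_matrix(num_units, state_size),
                                      rand_matrix(num_units, state_size),
                                      [rng.uniform(-1, 1) for _ in range(num_units)])
print(len(weights), round(sum(weights), 6), len(context))
```

`num_units` plays the same role as in the BahdanauAttention call above: it is the width of the small scoring network, independent of the encoder and decoder state sizes.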

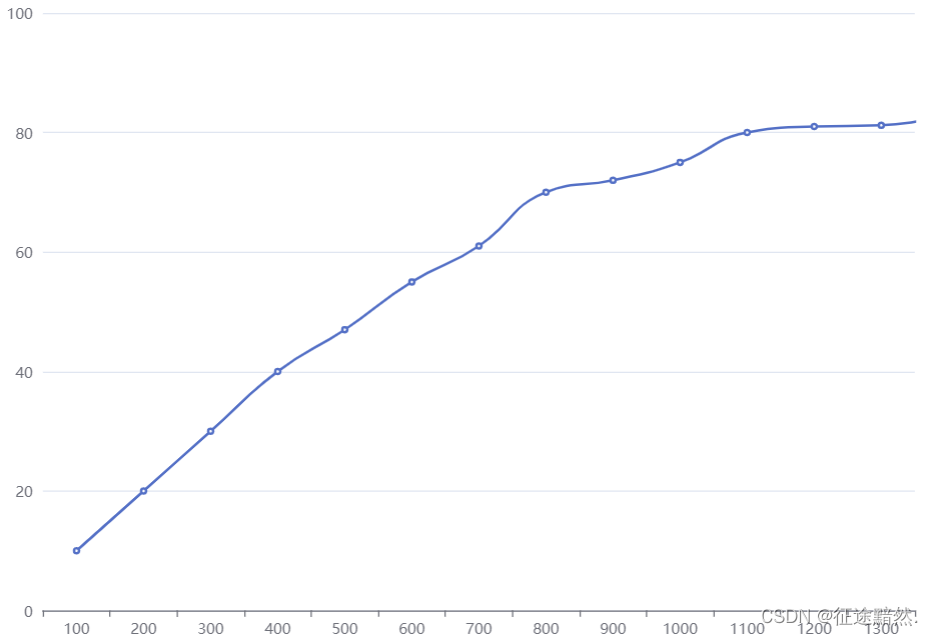

A training run:

···

Epoch 324 - Valid Loss: 0.3102

Epoch 325 - Valid Loss: 0.3023

Epoch 326 - Valid Loss: 0.3137

Epoch 327 - Valid Loss: 0.2787

Epoch 328 - Valid Loss: 0.2124

Epoch 329 - Valid Loss: 0.2888

Epoch 330 - Valid Loss: 0.1924

Epoch 331 - Valid Loss: 0.1475

Epoch 332 - Valid Loss: 0.1174

Epoch 333 - Valid Loss: 0.1021

Epoch 334 - Valid Loss: 0.1094

Epoch 335 - Valid Loss: 0.1037

Epoch 336 - Valid Loss: 0.0923

Epoch 337 - Valid Loss: 0.0773

Epoch 338 - Valid Loss: 0.0755

Epoch 339 - Valid Loss: 0.0738

Epoch 340 - Valid Loss: 0.0703

Epoch 341 - Valid Loss: 0.0740

Epoch 342 - Valid Loss: 0.0670

···

Epoch 480 - Valid Loss: 0.0066

Epoch 481 - Valid Loss: 0.0065

Epoch 482 - Valid Loss: 0.0063

Epoch 483 - Valid Loss: 0.0062

Epoch 484 - Valid Loss: 0.0061

Epoch 485 - Valid Loss: 0.0060

Epoch 486 - Valid Loss: 0.0058

···

Epoch 497 - Valid Loss: 0.0048

Epoch 498 - Valid Loss: 0.0047

Epoch 499 - Valid Loss: 0.0047

Model Trained and Saved

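One thing to note in particular: because the corpus at hand is so small, training converges slowly, and it takes more than 300 epochs before the loss reported as Valid Loss drops below 1.

Inference logic

The corpus is small, so the test results are not particularly good.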
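For context on the numbers in the log, the training script computes them with tf.contrib.seq2seq.sequence_loss weighted by a tf.sequence_mask. Below is a pure-Python sketch of that idea: cross-entropy averaged over unmasked positions only. The probabilities are invented, and the function names are chosen for this example rather than taken from TensorFlow.

```python
import math


def sequence_mask(lengths, max_len):
    """1.0 for positions inside the valid length of each sentence, 0.0 after it."""
    return [[1.0 if t < n else 0.0 for t in range(max_len)] for n in lengths]


def masked_cross_entropy(probs, targets, mask):
    """probs[b][t] is a distribution over the vocabulary at step t of sentence b."""
    total, count = 0.0, 0.0
    for b in range(len(targets)):
        for t in range(len(targets[b])):
            total += -math.log(probs[b][t][targets[b][t]]) * mask[b][t]
            count += mask[b][t]
    return total / count


uniform = [1 / 3, 1 / 3, 1 / 3]
probs = [[uniform, uniform, [0.98, 0.01, 0.01]]]  # one sentence, three steps, vocabulary of 3
targets = [[0, 1, 2]]
mask = sequence_mask([2], 3)  # only the first two steps count
loss = masked_cross_entropy(probs, targets, mask)
print(mask, round(loss, 4))
```

The badly predicted third step does not affect the result because it is masked out. In the original next_train_batch, the target length is taken from the already padded label, so during training the mask covers all 30 positions.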

__author__ = 'jmh081701'

import tensorflow as tf
from utils import DATAPROCESS

# Every sample in the test files is used for testing, so seperate_rate is 1 (100%):
# all samples go to the test split and the training split is empty.
dataGen = DATAPROCESS(source_ling_path="data/cn.test.txt",
                      dest_ling_path="data/en.test.txt",
                      source_word_embedings_path="data/cn.txt.ebd.npy",
                      source_vocb_path="data/cn.txt.vab",
                      dest_word_embeddings_path="data/en.txt.ebd.npy",
                      dest_vocb_path="data/en.txt.vab",
                      batch_size=300,
                      seperate_rate=1,
                      )

loaded_graph = tf.Graph()
with tf.Session(graph=loaded_graph) as sess:
    # Load saved model
    loader = tf.train.import_meta_graph('checkpoints/dev.meta')
    loader.restore(sess, tf.train.latest_checkpoint('./checkpoints'))

    input_data = loaded_graph.get_tensor_by_name('inputs:0')
    logits = loaded_graph.get_tensor_by_name('predictions:0')
    target_sequence_length = loaded_graph.get_tensor_by_name('target_sequence_len:0')
    source_sequence_length = loaded_graph.get_tensor_by_name('source_sequence_len:0')

    print("inference begin ")
    output_x, output_label, src_sequence_length, dst_sequence_length = dataGen.test_data()
    print("inference")
    translate_logits = sess.run(fetches=logits,
                                feed_dict={input_data: output_x,
                                           target_sequence_length: dst_sequence_length,
                                           source_sequence_length: src_sequence_length})
    # print the first 30 source sentences with their translations
    for i in range(30):
        src = dataGen.src_id2words(output_x[i])
        dst = dataGen.tgt_id2words(translate_logits[i])
        print({"src": src})
        print({'dst': dst})
        print("Next Line")

Prediction results:

{'src': '其三 , 发展 和 完善 金融 市场 , 提高 中国 金融 企业 资金 经营 水平 和 社会 资金 使用 效益 <END> <END> <END>'}

{'dst': '<START> third , we will develop and improve financial markets , and enhance the level of capital management and social capital efficiency of enterprises <END> <UNK> <UNK> <UNK> <UNK> <UNK>'}

Next Line

{'src': '参照 国际 上 金融 业务 综合 经营 趋势 , 逐步 完善 中国 金融业 分业 经营 、 分业 监管 的 体制 <END> <END> <END>'}

{'dst': '<START> following the trend of comprehensive management of international financial business , we must gradually improve the operation and management systems for separate sectors by the chinese industry <END> <UNK>'}

Next Line

{'src': '他 说 , 越共 “ 九 大 ” 的 各 项 准备 工作 已经 就绪 , 相信 大会 一定 会 取得 圆满 成功'}

{'dst': '<START> he said : various preparations for the upcoming congress have been under way and he is convinced that the congress will be a successful one <END> <UNK> <UNK> <UNK>'}

Next Line

{'src': '要 围绕 增强 城市 整体 功能 和 竞争力 , 把 上海 建设 成为 国际 经济 、 金融 、 贸易 和 航运 中心 之一'}

{'dst': '<START> it should focus its efforts on expanding overall urban functions and competitiveness and build itself into one of the international economic , financial , trade and shipping centers <END>'}

Next Line
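The raw predictions above still carry the `<START>` marker and whatever follows `<END>`. A small post-processing step, sketched here with a hypothetical helper `trim_translation` that is not part of the original project, would cut each output at the first `<END>`.

```python
def trim_translation(text, start="<START>", end="<END>"):
    """Keep only the tokens before the first end marker and drop start markers."""
    tokens = text.split()
    if end in tokens:
        tokens = tokens[:tokens.index(end)]
    return " ".join(t for t in tokens if t != start)


raw = "<START> he said : various preparations have been under way <END> <UNK> <UNK> <UNK>"
clean = trim_translation(raw)
print(clean)
```

The same function could be applied to the string returned by tgt_id2words before printing it.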

The full project code is available at https://github.com/jmhIcoding/machine_translation/tree/devolpe