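Over the past few days I implemented a convolutional neural network in NumPy and trained it on five MNIST digits (0 to 4). I am confident that the forward and backward passes of the fully connected layer are correct. I am less sure about the convolution layer: it is probably right, and I will fix it if I find an error later. The convolution layer trains very slowly on the CPU. I also tried replacing Flatten with GlobalAveragePooling once and the result looked wrong; I will try it again when I get the chance.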
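Since the convolution layer's backward pass is the uncertain part, a finite-difference gradient check is one way to test it. The sketch below is not the `Conv2D` class from the listing: `conv_forward`, `conv_backward` and `numerical_grad` are hypothetical helpers for a single channel, stride 1 and no padding, written only to show the idea.

```python
import numpy as np

def conv_forward(x, w):
    """Valid cross-correlation of a 2-D input x with a square kernel w (stride 1)."""
    m = w.shape[0]
    out = np.zeros((x.shape[0] - m + 1, x.shape[1] - m + 1))
    for j in range(out.shape[0]):
        for k in range(out.shape[1]):
            out[j, k] = (x[j:j + m, k:k + m] * w).sum()
    return out

def conv_backward(x, w, dout):
    """Analytic gradients of sum(out * dout) with respect to w and x."""
    m = w.shape[0]
    dw = np.zeros_like(w)
    dx = np.zeros_like(x)
    for j in range(dout.shape[0]):
        for k in range(dout.shape[1]):
            dw += dout[j, k] * x[j:j + m, k:k + m]
            dx[j:j + m, k:k + m] += dout[j, k] * w
    return dw, dx

def numerical_grad(f, a, eps=1e-6):
    """Central-difference gradient of the scalar function f() with respect to array a."""
    g = np.zeros_like(a)
    for idx in np.ndindex(a.shape):
        old = a[idx]
        a[idx] = old + eps
        fp = f()
        a[idx] = old - eps
        fm = f()
        a[idx] = old  # restore the perturbed entry
        g[idx] = (fp - fm) / (2 * eps)
    return g

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 6))
w = rng.standard_normal((3, 3))
dout = rng.standard_normal((4, 4))  # stands in for the upstream gradient

dw, dx = conv_backward(x, w, dout)
loss = lambda: (conv_forward(x, w) * dout).sum()
err_w = np.abs(dw - numerical_grad(loss, w)).max()
err_x = np.abs(dx - numerical_grad(loss, x)).max()
print(err_w, err_x)
```

If both errors are tiny, the analytic backward pass agrees with the numerical one. The same check can be pointed at a real layer by perturbing its weights and re-running its forward pass.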

import numpy as np
import pandas as pd
from abc import ABCMeta, abstractmethod
import copy

def tanh(x):
    return np.tanh(x)

def tanh_derivative(x):
    # x is the activation output tanh(z)
    return 1.0 - x * x

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(x):
    # x is the activation output sigmoid(z)
    return x * (1 - x)

def relu(x):
    return np.maximum(x, 0)

def relu_derivative(x):
    # x is the activation output relu(z), so it is already >= 0
    t = copy.copy(x)
    #for i in range(len(t)):
    #    if t[i] <= (1e-12):
    #        t[i] = 0
    #    else:
    #        t[i] = 1
    t[t > 0] = 1
    return t

class ActivationFunc:
    def __init__(self):
        self.tdict = dict()
        self.tdict['tanh'] = np.tanh
        self.tdict['sigmoid'] = lambda x: 1 / (1 + np.exp(-x.clip(-40,40)))
        self.tdict['relu'] = relu
        # unnormalized softmax: the division by the sum happens in CNetwork.backward
        self.tdict['softmax'] = lambda x: np.exp(x.clip(-40, 40))
        self.ddict = dict()
        self.ddict['tanh'] = tanh_derivative
        self.ddict['sigmoid'] = sigmoid_derivative
        self.ddict['relu'] = relu_derivative
        self.ddict['softmax'] = lambda x: np.exp(x.clip(-40, 40))

    def getActivation(self, activation):
        if activation in self.tdict:
            return self.tdict[activation]
        else:
            return lambda x: x

    def getDActivation(self, activation):
        if activation in self.ddict:
            return self.ddict[activation]
        else:
            return lambda x: np.ones(x.shape)

class BaseLayer:
    def __init__(self):
        self.minput = None
        self.moutput = None
        self.para = None
        self.bstep = 0
        self.grad = None
        self.activationFac = ActivationFunc()

    @abstractmethod
    def mcompile(self):
        raise NotImplementedError

    @abstractmethod
    def forward(self):
        raise NotImplementedError

    @abstractmethod
    def backward(self):
        raise NotImplementedError

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        a = 'doingnothing'

#---- can be splitted into 2 files----
class Conv2D(BaseLayer):
    def __init__(self, activation = 'relu', msize = 3, filters = 1, padding = 'same', strides = 1):
        BaseLayer.__init__(self)
        self.msize = msize
        self.activation = self.activationFac.getActivation(activation)
        self.dactivation = self.activationFac.getDActivation(activation)
        self.minput = None
        self.moutput = None
        self.stride = int(strides)
        self.outputshape = None
        self.inputshape = None
        self.padding = padding
        self.para = None
        self.grad = None
        self.backout = None
        self.mbias = None
        self.gbias = None
        self.filternum = filters
        self.bstep = 0
        self.outputfunc = False
        self.validshape = None

    def mcompile(self, val = None, inputshape = (1,), isoutput = False):
        self.validshape = inputshape
        if self.padding == 'same':
            # zero padding is added at the bottom and right only
            self.inputshape = (inputshape[0], (inputshape[1] + self.msize - 1) // self.stride * self.stride, (inputshape[2] + self.msize - 1) // self.stride * self.stride)
        else:
            self.inputshape = self.validshape
        if val is None:
            val = np.sqrt(6 / (self.msize * self.msize * self.inputshape[0] * self.inputshape[1] * self.inputshape[2]))
        # one msize x msize kernel per filter, shared by all input channels
        self.para = 2 * val * (np.random.rand(self.filternum, self.msize, self.msize) - 0.5)
        self.grad = np.zeros((self.filternum, self.msize, self.msize))
        self.mbias = (2 * val * (np.random.rand(self.filternum) - 0.5))
        self.gbias = np.zeros(self.filternum)
        self.minput = np.zeros(self.inputshape)
        self.outputshape = (self.filternum, (self.inputshape[1] - self.msize)//self.stride + 1, (self.inputshape[2] - self.msize)//self.stride + 1)
        self.moutput = np.zeros(self.outputshape)
        self.backout = np.zeros(self.inputshape)
        return self.outputshape

    def forward(self, minput):
        self.minput[:, :minput.shape[1], :minput.shape[2]] = minput
        for i in range(self.filternum):
            for j in range(0, (self.inputshape[1] - self.msize)//self.stride + 1):
                xstart = j * self.stride
                for k in range(0, (self.inputshape[2] - self.msize)//self.stride + 1):
                    ystart = k * self.stride
                    self.moutput[i][j][k] = self.mbias[i]
                    for i1 in range(0, self.inputshape[0]):
                        self.moutput[i][j][k] += np.multiply(self.minput[i1][xstart:xstart + self.msize, ystart:ystart + self.msize], self.para[i]).sum()
        self.moutput = self.activation(self.moutput)
        return self.moutput

    def backward(self, mloss):
        #print(self.moutput.shape, mloss.shape)
        xloss = self.dactivation(self.moutput) * mloss
        hend = ((self.inputshape[1] - self.msize)//self.stride + 1) * self.stride
        vend = ((self.inputshape[2] - self.msize)//self.stride + 1) * self.stride
        # without consider mloss[i] is full of zero
        '''
        for i in range(filters):
            for j in range(msize):
                for k in range(msize):
                    for i1 in range(self.inputshape[0]):
                        self.grad[i][j][k] += np.dot(mloss[i], self.minput[i1][j : hend + j: self.stride, k : vend + k : self.stride])
        self.backout.fill(0)
        for i in range(filters):
            for j in range(msize):
                for k in range(msize):
                    for i1 in range(self.inputshape[0]):
                        self.backout[i1][j: hend + j: self.stride, k: vend + k: self.stride] += self.para[i][j][k] * mloss[i]
        '''
        self.backout.fill(0)
        for i in range(self.filternum):
            for j in range(self.outputshape[1]):
                for k in range(self.outputshape[2]):
                    # skip output positions whose gradient is (numerically) zero
                    if np.abs(xloss[i][j][k]) > 1e-12:
                        xstart = j * self.stride
                        ystart = k * self.stride
                        tloss = xloss[i][j][k]
                        self.gbias[i] += tloss
                        for i1 in range(self.inputshape[0]):
                            self.grad[i] += tloss * self.minput[i1][xstart: xstart + self.msize, ystart: ystart + self.msize]
                            self.backout[i1][xstart:xstart + self.msize, ystart: ystart + self.msize] += tloss * self.para[i]
        # drop the padded rows and columns before passing the gradient back
        return copy.copy(self.backout[:, :self.validshape[1], :self.validshape[2]])

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        self.para -= lr * bcnt * self.grad / maxdiv
        self.mbias -= lr * bcnt * self.gbias / maxdiv
        self.grad.fill(0)
        self.gbias.fill(0)

class Dense(BaseLayer):
    def __init__(self, activation = 'relu', msize = 1):
        BaseLayer.__init__(self)
        self.outputsize = msize
        self.activation = self.activationFac.getActivation(activation)
        self.dactivation = self.activationFac.getDActivation(activation)
        self.activationname = activation
        self.para = None
        self.grad = None
        self.minput = None
        self.moutput = None
        self.mbias = None
        self.gbias = None
        self.isoutput = False

    def mcompile(self, val = None, inputshape = (1,), isoutput = False):
        self.inputsize = inputshape[0]
        if val is None:
            val = np.sqrt(6/(self.inputsize + self.outputsize))
        self.para = 2 * val * (np.random.rand(self.inputsize, self.outputsize)- 0.5)
        self.grad = np.zeros((self.inputsize, self.outputsize))
        self.mbias = 2 * val * (np.random.rand(self.outputsize) - 0.5)
        self.gbias = np.zeros(self.outputsize)
        self.moutput = np.zeros(self.outputsize)
        self.isoutput = isoutput
        return self.moutput.shape

    def forward(self, minput):
        self.minput = np.atleast_2d(minput)
        #print(self.minput.shape,self.para.shape,self.mbias.shape)
        self.moutput = self.activation(np.matmul(self.minput, self.para) + self.mbias)
        return self.moutput

    def backward(self, mloss):
        # for the output layer, mloss is already the gradient with respect to the pre-activation
        if not self.isoutput:
            tloss = mloss * self.dactivation(self.moutput)
        else:
            tloss = mloss
        self.grad += np.matmul(self.minput.T, tloss)
        self.backout = np.matmul(tloss, self.para.T)
        self.gbias += np.squeeze(tloss, axis = 0)
        return self.backout

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        self.para -= lr * bcnt * self.grad / maxdiv
        self.mbias -= lr * bcnt * self.gbias / maxdiv
        self.grad.fill(0)
        self.gbias.fill(0)

class MaxPooling(BaseLayer):
    def __init__(self, msize = 2):
        BaseLayer.__init__(self)
        self.msize = int(msize)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.maxid = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        # assumes a square input
        tmp = (self.inputsize[1] + self.msize - 1) // self.msize
        self.outputsize = (self.inputsize[0], tmp, tmp)
        self.moutput = np.zeros(self.outputsize)
        self.maxid = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        self.moutput.fill(0)
        for i in range(self.outputsize[0]):
            for j in range(self.outputsize[1]):
                for k in range(self.outputsize[2]):
                    # starting from 0 assumes non-negative inputs (e.g. after relu)
                    tmax = 0
                    tmaxid = 0
                    for j1 in range(self.msize):
                        if j * self.msize + j1 == self.inputsize[1]:
                            break
                        for k1 in range(self.msize):
                            if k * self.msize + k1 == self.inputsize[2]:
                                break
                            if self.minput[i][j * self.msize + j1][k * self.msize + k1] > tmax:
                                tmax = self.minput[i][j * self.msize + j1][k * self.msize + k1]
                                tmaxid = j1 * self.msize + k1
                    self.maxid[i][j][k] = tmaxid
                    self.moutput[i][j][k] = tmax
        return self.moutput

    def backward(self, mloss):
        self.backout.fill(0)
        for i in range(self.outputsize[0]):
            for j in range(self.outputsize[1]):
                for k in range(self.outputsize[2]):
                    tloss = mloss[i][j][k]
                    if np.abs(tloss) > 1e-12:
                        xid = int(self.maxid[i][j][k]) // self.msize
                        yid = int(self.maxid[i][j][k]) % self.msize
                        #print(j * self.msize + xid, k * self.msize + yid)
                        self.backout[i][j * self.msize + xid][k * self.msize + yid] = tloss
        return self.backout

class GlobalAveragePooling(BaseLayer):
    def __init__(self):
        BaseLayer.__init__(self)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.inputsize = None
        self.outputsize = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        self.outputsize = (self.inputsize[0], )
        self.moutput = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        for i in range(self.outputsize[0]):
            self.moutput[i] = minput[i].mean()
        return self.moutput

    def backward(self, mloss):
        for i in range(self.outputsize[0]):
            # d(mean)/d(input element) = 1 / (H * W), independent of the input value
            self.backout[i] = mloss[0][i] / (self.inputsize[1] * self.inputsize[2])
        return self.backout

class Flatten(BaseLayer):
    def __init__(self):
        BaseLayer.__init__(self)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.inputsize = None
        self.outputsize = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        self.outputsize = (self.inputsize[0]*self.inputsize[1] * self.inputsize[2], )
        self.moutput = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        #for i in range(self.outputsize[0]):
        self.moutput = minput.flatten()
        return self.moutput

    def backward(self, mloss):
        self.backout = mloss.reshape(self.inputsize)
        return self.backout

class CNetwork:
    def __init__(self, inputsize):
        self.layerlist = []
        self.moutput = None
        self.inputsize = inputsize
        self.outputfunc = None
        self.bstep = 0
        self.lr = 0.001

    def mcompile(self, lr = 0.001):
        nowinputshape = self.inputsize
        #print(nowinputshape)
        for layer in self.layerlist:
            flag = layer is self.layerlist[-1]
            nowinputshape = layer.mcompile(inputshape = nowinputshape, isoutput = flag)
            #print(nowinputshape)
        self.outputfunc = self.layerlist[-1].activationname
        self.lr = lr

    def add(self, nowlayer):
        self.layerlist.append(nowlayer)

    def forward(self, minput):
        for eachlayer in self.layerlist:
            minput = eachlayer.forward(minput)
        return copy.copy(minput)

    def backward(self, y, y_label):
        self.maxnum = 0.001
        self.bstep += 1
        loss = copy.copy(y)
        if self.outputfunc == 'softmax':
            # y holds unnormalized exp values; this yields softmax(y) - onehot(y_label)
            tsumy = sum(y)
            loss[y_label] -= tsumy
            loss /= max(tsumy, 1e-4)
        elif self.outputfunc == 'sigmoid':
            if y_label == 1:
                loss -= 1
        loss = np.atleast_2d(loss)
        #print(loss)
        for layer in reversed(self.layerlist):
            loss = layer.backward(loss)

    def step(self):
        mdiv = 0
        for layer in self.layerlist:
            if layer.grad is not None:
                mdiv = max(mdiv, np.abs(layer.grad).max())
                mdiv = max(mdiv, layer.gbias.max())
        for layer in self.layerlist:
            layer.step(lr = self.lr, bcnt = self.bstep, maxdiv = max(mdiv // 10, 1))
        self.bstep = 0

    def predict(self, minput):
        predictions = self.forward(minput)
        res = np.argmax(predictions[0])
        return res

if __name__ == "__main__":
    #model = CNetwork(inputsize = (784,))
    model = CNetwork(inputsize = (1, 28, 28))
    model.add(Conv2D(filters = 6, msize = 3))
    model.add(MaxPooling(msize = 2))
    model.add(Conv2D(filters = 16, msize = 3))
    model.add(MaxPooling(msize = 2))
    model.add(Flatten())
    model.add(Dense(msize = 64))
    model.add(Dense(msize = 5, activation = 'softmax'))
    model.mcompile(lr = 0.001)
    x_train = np.load('mnist/x_train.npy')
    y_train = np.load('mnist/y_train.npy')
    x_test = np.load('mnist/x_test.npy')
    y_test = np.load('mnist/y_test.npy')
    '''
    epochs = 4
    for e in range(epochs):
        for i in range(len(x_train)):
            if y_train[i] > 1:
                continue
            moutput = model.forward(x_train[i].reshape(784, ))
            #print(moutput, y_train[i])
            model.backward(moutput, y_train[i])
            if i % 10 == 9:
                model.step()
        tcnt = 0
        tot = 0
        for i in range(len(x_test)):
            if y_test[i] < 2:
                tot += 1
                tmp = model.forward(x_test[i].reshape(784,))
                if int(tmp > 0.5) == y_test[i]:
                    tcnt += 1
        print('epoch {},Accuracy {}%'.format(e+1,tcnt / tot * 100))
    '''
    epochs = 1
    for e in range(epochs):
        tot = 0
        for i in range(len(x_train)//2):
            # keep only the digits 0 to 4
            if y_train[i] >= 5:
                continue
            moutput = model.forward(np.expand_dims(x_train[i], axis = 0))
            print(moutput, y_train[i])
            model.backward(np.squeeze(moutput, axis = 0), y_train[i])
            #if i > len(x_train)//2 - 20:
            #for i in range(2):
            #    print(model.layerlist[i].backout)
            #print()
            #if i % 10 == 9:
            if tot % 5 == 4:
                model.step()
            tot += 1
        tcnt = 0
        tot = 0
        val_loss = 0
        for i in range(len(x_test)//2):
            if y_test[i] < 5:
                tot += 1
                tmp = model.forward(np.expand_dims(x_test[i],axis = 0))
                tx = np.argmax(tmp[0])
                val_loss += min(-np.log(tmp[0][y_test[i]]/tmp[0].sum()), 100)
                if tx == y_test[i]:
                    tcnt += 1
        val_loss /= tot
        print('epoch {},Accuracy {}%,val_loss {}'.format(e + 1, tcnt / tot * 100, val_loss))
    '''
    model = CNetwork(inputsize = (2,))
    model.add(Dense(msize = 16))
    model.add(Dense(msize = 8))
    model.add(Dense(msize = 1,activation = 'sigmoid'))
    model.mcompile(lr = 0.001)
    print(model.outputfunc)
    X = np.array([[0,0],[0,1],[1,0],[1,1]])
    y = np.array([0, 1, 1, 0])
    for i in range(10000):
        for j in range(4):
            moutput = model.forward(np.expand_dims(X[j], axis = 0))
            #print(moutput)
            model.backward(np.squeeze(moutput, axis = 0), y[j])
        model.step()
    for j in range(4):
        print(model.forward(X[j]))
    '''

The last few lines of the output:

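Accuracy is the accuracy on the test set. The arrays are the raw outputs of the softmax layer; once normalized, they are the predicted probabilities.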
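The 'softmax' activation in the listing returns `np.exp(x.clip(-40, 40))` without dividing by the sum, which is why the printed arrays need normalizing. A minimal sketch of that step, where `to_probabilities` and the sample values are made up for illustration:

```python
import numpy as np

def to_probabilities(raw):
    """Divide unnormalized exp outputs by their row sum so each row adds up to 1."""
    raw = np.asarray(raw, dtype=float)
    return raw / raw.sum(axis=-1, keepdims=True)

# made-up raw outputs for one sample with five classes
raw = np.exp(np.array([[2.0, 1.0, 0.0, -1.0, 0.5]]))
probs = to_probabilities(raw)
pred = int(np.argmax(probs[0]))
print(probs, pred)
```

Normalizing does not change the argmax, so the predicted class is the same before and after.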

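I later realized I had forgotten to normalize the input, so I fixed that, changed Flatten to GlobalAveragePooling, and ran it again. The choice of initial weight values turned out to matter a lot.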
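To make the effect of the initialization concrete, the sketch below compares the uniform limit `val` that the first listing uses for its first convolution layer (3x3 kernel, input padded to 1x30x30) with the one the next listing uses (5x5 kernel, one input channel). `uniform_init` is a hypothetical helper; the two formulas are copied from the `mcompile` methods.

```python
import numpy as np

def uniform_init(shape, limit, rng):
    """Draw weights uniformly from [-limit, limit]."""
    return rng.uniform(-limit, limit, size=shape)

# first listing: val = sqrt(6 / (msize * msize * C * H * W)) with the padded input shape
limit_v1 = np.sqrt(6 / (3 * 3 * 1 * 30 * 30))
# next listing: val = sqrt(6 / (C * msize * msize))
limit_v2 = np.sqrt(6 / (1 * 5 * 5))

rng = np.random.default_rng(0)
w1 = uniform_init((6, 3, 3), limit_v1, rng)
w2 = uniform_init((8, 5, 5), limit_v2, rng)
print(round(limit_v1, 4), round(limit_v2, 4))
```

The first limit is roughly 18 times smaller than the second, so the first version starts with much weaker filter responses.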

import numpy as np
import pandas as pd
from abc import ABCMeta, abstractmethod
import copy

def tanh(x):
    return np.tanh(x)

def tanh_derivative(x):
    # x is the activation output tanh(z)
    return 1.0 - x * x

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(x):
    # x is the activation output sigmoid(z)
    return x * (1 - x)

def relu(x):
    return np.maximum(x, 0)

def relu_derivative(x):
    # x is the activation output relu(z), so it is already >= 0
    t = copy.copy(x)
    #for i in range(len(t)):
    #    if t[i] <= (1e-12):
    #        t[i] = 0
    #    else:
    #        t[i] = 1
    t[t > 0] = 1
    return t

class ActivationFunc:
    def __init__(self):
        self.tdict = dict()
        self.tdict['tanh'] = np.tanh
        self.tdict['sigmoid'] = lambda x: 1 / (1 + np.exp(-x.clip(-40,40)))
        self.tdict['relu'] = relu
        # unnormalized softmax: the division by the sum happens in CNetwork.backward
        self.tdict['softmax'] = lambda x: np.exp(x.clip(-40, 40))
        self.ddict = dict()
        self.ddict['tanh'] = tanh_derivative
        self.ddict['sigmoid'] = sigmoid_derivative
        self.ddict['relu'] = relu_derivative
        self.ddict['softmax'] = lambda x: np.exp(x.clip(-40, 40))

    def getActivation(self, activation):
        if activation in self.tdict:
            return self.tdict[activation]
        else:
            return lambda x: x

    def getDActivation(self, activation):
        if activation in self.ddict:
            return self.ddict[activation]
        else:
            return lambda x: np.ones(x.shape)

class BaseLayer:
    def __init__(self):
        self.minput = None
        self.moutput = None
        self.para = None
        self.bstep = 0
        self.grad = None
        self.activationFac = ActivationFunc()

    @abstractmethod
    def mcompile(self):
        raise NotImplementedError

    @abstractmethod
    def forward(self):
        raise NotImplementedError

    @abstractmethod
    def backward(self):
        raise NotImplementedError

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        a = 'doingnothing'

#---- can be splitted into 2 files----
class Conv2D(BaseLayer):
    def __init__(self, activation = 'relu', msize = 3, filters = 1, padding = 'same', strides = 1):
        BaseLayer.__init__(self)
        self.msize = msize
        self.activation = self.activationFac.getActivation(activation)
        self.dactivation = self.activationFac.getDActivation(activation)
        self.minput = None
        self.moutput = None
        self.stride = int(strides)
        self.outputshape = None
        self.inputshape = None
        self.padding = padding
        self.para = None
        self.grad = None
        self.backout = None
        self.mbias = None
        self.gbias = None
        self.filternum = filters
        self.bstep = 0
        self.outputfunc = False
        self.validshape = None

    def mcompile(self, val = None, inputshape = (1,), isoutput = False):
        self.validshape = inputshape
        if self.padding == 'same':
            # zero padding is added at the bottom and right only
            self.inputshape = (inputshape[0], (inputshape[1] + self.msize - 1) // self.stride * self.stride, (inputshape[2] + self.msize - 1) // self.stride * self.stride)
        else:
            self.inputshape = self.validshape
        if val is None:
            val = np.sqrt(6 / ( self.inputshape[0] * self.msize * self.msize))
        # one msize x msize kernel per filter, shared by all input channels
        self.para = 2 * val * (np.random.rand(self.filternum, self.msize, self.msize) - 0.5)
        self.grad = np.zeros((self.filternum, self.msize, self.msize))
        self.mbias = (2 * val * (np.random.rand(self.filternum) - 0.5))
        self.gbias = np.zeros(self.filternum)
        self.minput = np.zeros(self.inputshape)
        self.outputshape = (self.filternum, (self.inputshape[1] - self.msize)//self.stride + 1, (self.inputshape[2] - self.msize)//self.stride + 1)
        self.moutput = np.zeros(self.outputshape)
        self.backout = np.zeros(self.inputshape)
        return self.outputshape

    def forward(self, minput):
        self.minput[:, :minput.shape[1], :minput.shape[2]] = minput
        for i in range(self.filternum):
            for j in range(0, (self.inputshape[1] - self.msize)//self.stride + 1):
                xstart = j * self.stride
                for k in range(0, (self.inputshape[2] - self.msize)//self.stride + 1):
                    ystart = k * self.stride
                    self.moutput[i][j][k] = self.mbias[i]
                    for i1 in range(0, self.inputshape[0]):
                        self.moutput[i][j][k] += np.multiply(self.minput[i1][xstart:xstart + self.msize, ystart:ystart + self.msize], self.para[i]).sum()
        self.moutput = self.activation(self.moutput)
        return self.moutput

    def backward(self, mloss):
        #print(self.moutput.shape, mloss.shape)
        xloss = self.dactivation(self.moutput) * mloss
        hend = ((self.inputshape[1] - self.msize)//self.stride + 1) * self.stride
        vend = ((self.inputshape[2] - self.msize)//self.stride + 1) * self.stride
        # without consider mloss[i] is full of zero
        '''
        for i in range(filters):
            for j in range(msize):
                for k in range(msize):
                    for i1 in range(self.inputshape[0]):
                        self.grad[i][j][k] += np.dot(mloss[i], self.minput[i1][j : hend + j: self.stride, k : vend + k : self.stride])
        self.backout.fill(0)
        for i in range(filters):
            for j in range(msize):
                for k in range(msize):
                    for i1 in range(self.inputshape[0]):
                        self.backout[i1][j: hend + j: self.stride, k: vend + k: self.stride] += self.para[i][j][k] * mloss[i]
        '''
        self.backout.fill(0)
        for i in range(self.filternum):
            for j in range(self.outputshape[1]):
                for k in range(self.outputshape[2]):
                    # skip output positions whose gradient is (numerically) zero
                    if np.abs(xloss[i][j][k]) > 1e-12:
                        xstart = j * self.stride
                        ystart = k * self.stride
                        tloss = xloss[i][j][k]
                        self.gbias[i] += tloss
                        for i1 in range(self.inputshape[0]):
                            self.grad[i] += tloss * self.minput[i1][xstart: xstart + self.msize, ystart: ystart + self.msize]
                            self.backout[i1][xstart:xstart + self.msize, ystart: ystart + self.msize] += tloss * self.para[i]
        # drop the padded rows and columns before passing the gradient back
        return copy.copy(self.backout[:, :self.validshape[1], :self.validshape[2]])

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        self.para -= lr * bcnt * self.grad / maxdiv
        self.mbias -= lr * bcnt * self.gbias / maxdiv
        self.grad.fill(0)
        self.gbias.fill(0)

class Dense(BaseLayer):
    def __init__(self, activation = 'relu', msize = 1):
        BaseLayer.__init__(self)
        self.outputsize = msize
        self.activation = self.activationFac.getActivation(activation)
        self.dactivation = self.activationFac.getDActivation(activation)
        self.activationname = activation
        self.para = None
        self.grad = None
        self.minput = None
        self.moutput = None
        self.mbias = None
        self.gbias = None
        self.isoutput = False

    def mcompile(self, val = None, inputshape = (1,), isoutput = False):
        self.inputsize = inputshape[0]
        if val is None:
            val = np.sqrt(6/(self.inputsize + self.outputsize))
        self.para = 2 * val * (np.random.rand(self.inputsize, self.outputsize)- 0.5)
        self.grad = np.zeros((self.inputsize, self.outputsize))
        self.mbias = 2 * val * (np.random.rand(self.outputsize) - 0.5)
        self.gbias = np.zeros(self.outputsize)
        self.moutput = np.zeros(self.outputsize)
        self.isoutput = isoutput
        return self.moutput.shape

    def forward(self, minput):
        self.minput = np.atleast_2d(minput)
        #print(self.minput.shape,self.para.shape,self.mbias.shape)
        self.moutput = self.activation(np.matmul(self.minput, self.para) + self.mbias)
        return self.moutput

    def backward(self, mloss):
        # for the output layer, mloss is already the gradient with respect to the pre-activation
        if not self.isoutput:
            tloss = mloss * self.dactivation(self.moutput)
        else:
            tloss = mloss
        self.grad += np.matmul(self.minput.T, tloss)
        self.backout = np.matmul(tloss, self.para.T)
        self.gbias += np.squeeze(tloss, axis = 0)
        return self.backout

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        self.para -= lr * bcnt * self.grad / maxdiv
        self.mbias -= lr * bcnt * self.gbias / maxdiv
        self.grad.fill(0)
        self.gbias.fill(0)

class MaxPooling(BaseLayer):
    def __init__(self, msize = 2):
        BaseLayer.__init__(self)
        self.msize = int(msize)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.maxid = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        # assumes a square input
        tmp = (self.inputsize[1] + self.msize - 1) // self.msize
        self.outputsize = (self.inputsize[0], tmp, tmp)
        self.moutput = np.zeros(self.outputsize)
        self.maxid = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        self.moutput.fill(0)
        for i in range(self.outputsize[0]):
            for j in range(self.outputsize[1]):
                for k in range(self.outputsize[2]):
                    # starting from 0 assumes non-negative inputs (e.g. after relu)
                    tmax = 0
                    tmaxid = 0
                    for j1 in range(self.msize):
                        if j * self.msize + j1 == self.inputsize[1]:
                            break
                        for k1 in range(self.msize):
                            if k * self.msize + k1 == self.inputsize[2]:
                                break
                            if self.minput[i][j * self.msize + j1][k * self.msize + k1] > tmax:
                                tmax = self.minput[i][j * self.msize + j1][k * self.msize + k1]
                                tmaxid = j1 * self.msize + k1
                    self.maxid[i][j][k] = tmaxid
                    self.moutput[i][j][k] = tmax
        return self.moutput

    def backward(self, mloss):
        self.backout.fill(0)
        for i in range(self.outputsize[0]):
            for j in range(self.outputsize[1]):
                for k in range(self.outputsize[2]):
                    tloss = mloss[i][j][k]
                    if np.abs(tloss) > 1e-12:
                        xid = int(self.maxid[i][j][k]) // self.msize
                        yid = int(self.maxid[i][j][k]) % self.msize
                        #print(j * self.msize + xid, k * self.msize + yid)
                        self.backout[i][j * self.msize + xid][k * self.msize + yid] = tloss
        return self.backout

class GlobalAveragePooling(BaseLayer):
    def __init__(self):
        BaseLayer.__init__(self)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.inputsize = None
        self.outputsize = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        self.outputsize = (self.inputsize[0], )
        self.moutput = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        for i in range(self.outputsize[0]):
            self.moutput[i] = minput[i].mean()
        return self.moutput

    def backward(self, mloss):
        for i in range(self.outputsize[0]):
            # d(mean)/d(input element) = 1 / (H * W), independent of the input value
            self.backout[i] = mloss[0][i] / (self.inputsize[1] * self.inputsize[2])
        return self.backout

class Flatten(BaseLayer):
    def __init__(self):
        BaseLayer.__init__(self)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.inputsize = None
        self.outputsize = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        self.outputsize = (self.inputsize[0]*self.inputsize[1] * self.inputsize[2], )
        self.moutput = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        #for i in range(self.outputsize[0]):
        self.moutput = minput.flatten()
        return self.moutput

    def backward(self, mloss):
        self.backout = mloss.reshape(self.inputsize)
        return self.backout

class CNetwork:
    def __init__(self, inputsize):
        self.layerlist = []
        self.moutput = None
        self.inputsize = inputsize
        self.outputfunc = None
        self.bstep = 0
        self.lr = 0.001

    def mcompile(self, lr = 0.001):
        nowinputshape = self.inputsize
        #print(nowinputshape)
        for layer in self.layerlist:
            flag = layer is self.layerlist[-1]
            nowinputshape = layer.mcompile(inputshape = nowinputshape, isoutput = flag)
            #print(nowinputshape)
        self.outputfunc = self.layerlist[-1].activationname
        self.lr = lr

    def add(self, nowlayer):
        self.layerlist.append(nowlayer)

    def forward(self, minput):
        for eachlayer in self.layerlist:
            minput = eachlayer.forward(minput)
        return copy.copy(minput)

    def backward(self, y, y_label):
        self.maxnum = 0.001
        self.bstep += 1
        loss = copy.copy(y)
        if self.outputfunc == 'softmax':
            # y holds unnormalized exp values; this yields softmax(y) - onehot(y_label)
            tsumy = sum(y)
            loss[y_label] -= tsumy
            loss /= max(tsumy, 1e-4)
        elif self.outputfunc == 'sigmoid':
            if y_label == 1:
                loss -= 1
        loss = np.atleast_2d(loss)
        #print(loss)
        for layer in reversed(self.layerlist):
            loss = layer.backward(loss)

    def step(self):
        mdiv = 0
        for layer in self.layerlist:
            if layer.grad is not None:
                mdiv = max(mdiv, np.abs(layer.grad).max())
                mdiv = max(mdiv, layer.gbias.max())
        for layer in self.layerlist:
            layer.step(lr = self.lr, bcnt = self.bstep, maxdiv = max(mdiv // 1000, 1))
        self.bstep = 0

    def predict(self, minput):
        predictions = self.forward(minput)
        res = np.argmax(predictions[0])
        return res

if __name__ == "__main__":
    #model = CNetwork(inputsize = (784,))
    model = CNetwork(inputsize = (1, 28, 28))
    model.add(Conv2D(filters = 8, msize = 5))
    model.add(MaxPooling(msize = 2))
    model.add(Conv2D(filters = 20, msize = 5))
    model.add(MaxPooling(msize = 2))
    #model.add(GlobalAveragePooling())
    model.add(Flatten())
    model.add(Dense(msize = 64))
    model.add(Dense(msize = 10, activation = 'softmax'))
    model.mcompile(lr = 0.0001)
    # scale pixel values to [0, 1]
    x_train = np.load('mnist/x_train.npy') / 255
    y_train = np.load('mnist/y_train.npy')
    x_test = np.load('mnist/x_test.npy') / 255
    y_test = np.load('mnist/y_test.npy')
    #print(x_train[0])
    '''
    epochs = 4
    for e in range(epochs):
        for i in range(len(x_train)):
            if y_train[i] > 1:
                continue
            moutput = model.forward(x_train[i].reshape(784, ))
            #print(moutput, y_train[i])
            model.backward(moutput, y_train[i])
            if i % 10 == 9:
                model.step()
        tcnt = 0
        tot = 0
        for i in range(len(x_test)):
            if y_test[i] < 2:
                tot += 1
                tmp = model.forward(x_test[i].reshape(784,))
                if int(tmp > 0.5) == y_test[i]:
                    tcnt += 1
        print('epoch {},Accuracy {}%'.format(e+1,tcnt / tot * 100))
    '''
    epochs = 2
    for e in range(epochs):
        tot = 0
        for i in range(len(x_train)):
            # if y_train[i] >= 5:
            #     continue
            moutput = model.forward(np.expand_dims(x_train[i], axis = 0))
            print("case {}:".format(tot + 1), moutput, y_train[i])
            model.backward(np.squeeze(moutput, axis = 0), y_train[i])
            #if i > len(x_train)//2 - 20:
            #for i in range(2):
            #    print(model.layerlist[i].backout)
            #print()
            #if i % 10 == 9:
            if tot % 10 == 9:
                model.step()
            tot += 1
        tcnt = 0
        tot = 0
        val_loss = 0
        for i in range(len(x_test)):
            #if y_test[i] < 5:
            tot += 1
            tmp = model.forward(np.expand_dims(x_test[i],axis = 0))
            tx = np.argmax(tmp[0])
            val_loss += min(-np.log(tmp[0][y_test[i]]/tmp[0].sum()), 100)
            if tx == y_test[i]:
                tcnt += 1
        val_loss /= tot
        print('epoch {},Accuracy {}%,val_loss {}'.format(e + 1, tcnt / tot * 100, val_loss))
    '''
    model = CNetwork(inputsize = (2,))
    model.add(Dense(msize = 16))
    model.add(Dense(msize = 8))
    model.add(Dense(msize = 1,activation = 'sigmoid'))
    model.mcompile(lr = 0.001)
    print(model.outputfunc)
    X = np.array([[0,0],[0,1],[1,0],[1,1]])
    y = np.array([0, 1, 1, 0])
    for i in range(10000):
        for j in range(4):
            moutput = model.forward(np.expand_dims(X[j], axis = 0))
            #print(moutput)
            model.backward(np.squeeze(moutput, axis = 0), y[j])
        model.step()
    for j in range(4):
        print(model.forward(X[j]))
    '''

The results for this run are not available yet.

For a network this small, GlobalAveragePooling seems to train worse than Flatten.
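Before reading too much into that comparison, it may be worth ruling out the pooling layer's backward pass. Each input element contributes 1/(H·W) to its channel's mean, so the gradient for every element of channel i is upstream[i]/(H·W), regardless of the input values. The sketch below uses hypothetical standalone functions `gap_forward` and `gap_backward` (not the class from the listing) and checks one element numerically.

```python
import numpy as np

def gap_forward(x):
    """Global average pooling: (C, H, W) -> (C,)."""
    return x.mean(axis=(1, 2))

def gap_backward(x_shape, dout):
    """Spread each channel's upstream gradient evenly over its H*W inputs."""
    c, h, w = x_shape
    return np.broadcast_to(dout.reshape(c, 1, 1) / (h * w), x_shape).copy()

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4, 4))
dout = rng.standard_normal(3)  # stands in for the gradient coming from the next layer
dx = gap_backward(x.shape, dout)

# central-difference check on a single input element
eps = 1e-6
xp = x.copy()
xp[1, 2, 3] += eps
xm = x.copy()
xm[1, 2, 3] -= eps
num = ((gap_forward(xp) - gap_forward(xm)) @ dout) / (2 * eps)
print(num, dx[1, 2, 3])
```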

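The listing above is the final version. I trained it halfway and the results look quite good. I estimate that writing the convolution layer's forward and backward passes in C++ would cut the total run time to about 1/20 of what it is now. Running on a GPU might cut it much further, but without a computation graph I doubt GPU optimization is possible.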

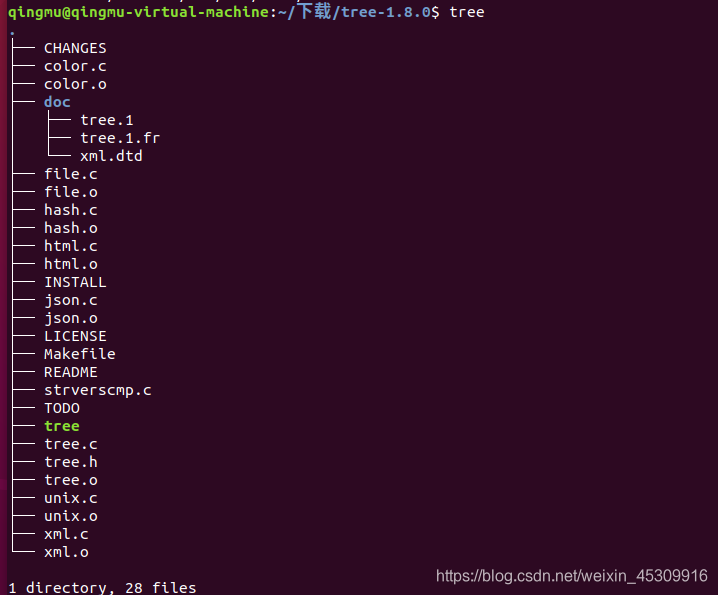
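A different way to attack the run time, staying inside NumPy, is to replace the per-pixel Python loops with one vectorized contraction. The sketch below is an assumption-laden illustration rather than a drop-in replacement for `Conv2D.forward`: it covers stride 1 without padding or activation, uses one kernel per (filter, input channel) pair, and relies on `numpy.lib.stride_tricks.sliding_window_view`, which needs NumPy 1.20 or newer. Both function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv_forward_loops(x, w, b):
    """Reference loops. x: (C, H, W), w: (F, C, m, m), b: (F,)."""
    f, c, m, _ = w.shape
    out = np.zeros((f, x.shape[1] - m + 1, x.shape[2] - m + 1))
    for i in range(f):
        for j in range(out.shape[1]):
            for k in range(out.shape[2]):
                out[i, j, k] = b[i] + (x[:, j:j + m, k:k + m] * w[i]).sum()
    return out

def conv_forward_vectorized(x, w, b):
    """Same result with a single einsum over all sliding windows."""
    m = w.shape[-1]
    # windows has shape (C, H-m+1, W-m+1, m, m)
    windows = sliding_window_view(x, (m, m), axis=(1, 2))
    return np.einsum('cjkuv,fcuv->fjk', windows, w) + b[:, None, None]

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 8, 8))
w = rng.standard_normal((4, 2, 3, 3))
b = rng.standard_normal(4)

out_loops = conv_forward_loops(x, w, b)
out_vec = conv_forward_vectorized(x, w, b)
print(np.abs(out_loops - out_vec).max())
```

How much this helps depends on the layer sizes, so it is worth timing on the actual 28x28 inputs before committing to it.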

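I later found that the C++ optimization does not seem feasible, because passing the arguments alone takes about a second. The last few lines of the output are shown below (one epoch takes about half a day):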

The following is the version of the code with Gram-Schmidt orthogonalization:

import numpy as np
import pandas as pd
from scipy import linalg
from abc import ABCMeta, abstractmethod
import copy

def tanh(x):
    return np.tanh(x)

def tanh_derivative(x):
    # x is the activation output tanh(z)
    return 1.0 - x * x

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(x):
    # x is the activation output sigmoid(z)
    return x * (1 - x)

def relu(x):
    return np.maximum(x, 0)

def relu_derivative(x):
    # x is the activation output relu(z), so it is already >= 0
    t = copy.copy(x)
    #for i in range(len(t)):
    #    if t[i] <= (1e-12):
    #        t[i] = 0
    #    else:
    #        t[i] = 1
    t[t > 0] = 1
    return t

class ActivationFunc:
    def __init__(self):
        self.tdict = dict()
        self.tdict['tanh'] = np.tanh
        self.tdict['sigmoid'] = lambda x: 1 / (1 + np.exp(-x.clip(-40,40)))
        self.tdict['relu'] = relu
        # unnormalized softmax: the division by the sum happens in CNetwork.backward
        self.tdict['softmax'] = lambda x: np.exp(x.clip(-40, 40))
        self.ddict = dict()
        self.ddict['tanh'] = tanh_derivative
        self.ddict['sigmoid'] = sigmoid_derivative
        self.ddict['relu'] = relu_derivative
        self.ddict['softmax'] = lambda x: np.exp(x.clip(-40, 40))

    def getActivation(self, activation):
        if activation in self.tdict:
            return self.tdict[activation]
        else:
            return lambda x: x

    def getDActivation(self, activation):
        if activation in self.ddict:
            return self.ddict[activation]
        else:
            return lambda x: np.ones(x.shape)

class BaseLayer:
    def __init__(self):
        self.minput = None
        self.moutput = None
        self.para = None
        self.bstep = 0
        self.grad = None
        self.activationFac = ActivationFunc()

    @abstractmethod
    def mcompile(self):
        raise NotImplementedError

    @abstractmethod
    def forward(self):
        raise NotImplementedError

    @abstractmethod
    def backward(self):
        raise NotImplementedError

    def getlen(self, vec):
        return np.sqrt(np.dot(vec, vec))

    def myschmitt(self, minput):
        # Gram-Schmidt on the rows of minput; each row keeps its original length
        for i in range(len(minput)):
            orglen = self.getlen(minput[i])
            for j in range(i):
                # subtract the projection of row i onto the already orthogonalized row j
                minput[i] -= np.dot(minput[j], minput[i])/np.dot(minput[j], minput[j]) * minput[j]
            minput[i] *= (orglen / self.getlen(minput[i]))
        return minput

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        a = 'doingnothing'

#---- can be splitted into 2 files----
class Conv2D(BaseLayer):
    def __init__(self, activation = 'relu', msize = 3, filters = 1, padding = 'same', strides = 1):
        BaseLayer.__init__(self)
        self.msize = msize
        self.activation = self.activationFac.getActivation(activation)
        self.dactivation = self.activationFac.getDActivation(activation)
        self.minput = None
        self.moutput = None
        self.stride = int(strides)
        self.outputshape = None
        self.inputshape = None
        self.padding = padding
        self.para = None
        self.grad = None
        self.backout = None
        self.mbias = None
        self.gbias = None
        self.filternum = filters
        self.bstep = 0
        self.outputfunc = False
        self.validshape = None

    def mcompile(self, val = None, inputshape = (1,), isoutput = False):
        self.validshape = inputshape
        if self.padding == 'same':
            # zero padding is added at the bottom and right only
            self.inputshape = (inputshape[0], (inputshape[1] + self.msize - 1) // self.stride * self.stride, (inputshape[2] + self.msize - 1) // self.stride * self.stride)
        else:
            self.inputshape = self.validshape
        if val is None:
            val = np.sqrt(6 / (self.msize * self.msize))
        # one kernel per (filter, input channel) pair, orthogonalized across filters when possible
        self.para = 2 * val * (np.random.rand(self.filternum, inputshape[0] * self.msize * self.msize) - 0.5)
        if self.para.shape[0] <= self.para.shape[1]:
            self.para = self.myschmitt(self.para).reshape(self.filternum, inputshape[0], self.msize, self.msize)
        else:
            self.para = self.para.reshape(self.filternum, inputshape[0], self.msize, self.msize)
        #self.para *= val
        self.grad = np.zeros((self.filternum, inputshape[0], self.msize, self.msize))
        self.mbias = (2 * val * (np.random.rand(self.filternum) - 0.5))
        self.gbias = np.zeros(self.filternum)
        self.minput = np.zeros(self.inputshape)
        self.outputshape = (self.filternum, (self.inputshape[1] - self.msize)//self.stride + 1, (self.inputshape[2] - self.msize)//self.stride + 1)
        self.moutput = np.zeros(self.outputshape)
        self.backout = np.zeros(self.inputshape)
        return self.outputshape

    def forward(self, minput):
        self.minput[:, :minput.shape[1], :minput.shape[2]] = minput
        for i in range(self.filternum):
            # j and k index output positions; the stride is applied once, via xstart and ystart
            for j in range(0, (self.inputshape[1] - self.msize)//self.stride + 1):
                xstart = j * self.stride
                for k in range(0, (self.inputshape[2] - self.msize)//self.stride + 1):
                    ystart = k * self.stride
                    self.moutput[i][j][k] = self.mbias[i]
                    for i1 in range(0, self.inputshape[0]):
                        self.moutput[i][j][k] += np.multiply(self.minput[i1][xstart:xstart + self.msize, ystart:ystart + self.msize], self.para[i][i1]).sum()
        self.moutput = self.activation(self.moutput)
        return self.moutput

    def backward(self, mloss):
        #print(self.moutput.shape, mloss.shape)
        xloss = self.dactivation(self.moutput) * mloss
        hend = ((self.inputshape[1] - self.msize)//self.stride + 1) * self.stride
        vend = ((self.inputshape[2] - self.msize)//self.stride + 1) * self.stride
        self.backout.fill(0)
        # without consider mloss[i] is full of zero
        '''
        oshape1 = self.outputshape[1]
        oshape2 = self.outputshape[2]
        for i in range(self.filternum):
            for i1 in range(self.inputshape[0]):
                for j in range(self.msize):
                    for k in range(self.msize):
                        self.grad[i][i1][j][k] += np.multiply(xloss[i], self.minput[i1][j: j+oshape1: self.stride, k: k+oshape2: self.stride]).sum()
                        self.backout[i1][j: j+oshape1: self.stride, k: k+oshape2: self.stride] += xloss[i] * self.para[i][i1][j][k]
        '''
        for i in range(self.filternum):
            for j in range(self.outputshape[1]):
                for k in range(self.outputshape[2]):
                    # skip output positions whose gradient is (numerically) zero
                    if np.abs(xloss[i][j][k]) > 1e-12:
                        xstart = j * self.stride
                        ystart = k * self.stride
                        tloss = xloss[i][j][k]
                        self.gbias[i] += tloss
                        for i1 in range(self.inputshape[0]):
                            self.grad[i][i1] += tloss * self.minput[i1][xstart: xstart + self.msize, ystart: ystart + self.msize]
                            self.backout[i1][xstart:xstart + self.msize, ystart: ystart + self.msize] += tloss * self.para[i][i1]
        # drop the padded rows and columns before passing the gradient back
        return copy.copy(self.backout[:, :self.validshape[1], :self.validshape[2]])

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        self.para -= lr * bcnt * self.grad / maxdiv
        self.mbias -= lr * bcnt * self.gbias / maxdiv
        self.grad.fill(0)
        self.gbias.fill(0)

class Dense(BaseLayer):
    def __init__(self, activation = 'relu', msize = 1):
        BaseLayer.__init__(self)
        self.outputsize = msize
        self.activation = self.activationFac.getActivation(activation)
        self.dactivation = self.activationFac.getDActivation(activation)
        self.activationname = activation
        self.para = None
        self.grad = None
        self.minput = None
        self.moutput = None
        self.mbias = None
        self.gbias = None
        self.isoutput = False

    def mcompile(self, val = None, inputshape = (1,), isoutput = False):
        self.inputsize = inputshape[0]
        if val is None:
            val = np.sqrt(6/(self.inputsize + self.outputsize))
        self.para = 2 * val * (np.random.rand(self.inputsize, self.outputsize)- 0.5)
        if self.para.shape[0] <= self.para.shape[1]:
            self.para = self.myschmitt(self.para)
        #self.para *= val
        self.grad = np.zeros((self.inputsize, self.outputsize))
        self.mbias = 2 * val * (np.random.rand(self.outputsize) - 0.5)
        self.gbias = np.zeros(self.outputsize)
        self.moutput = np.zeros(self.outputsize)
        self.isoutput = isoutput
        return self.moutput.shape

    def forward(self, minput):
        self.minput = np.atleast_2d(minput)
        #print(self.minput.shape,self.para.shape,self.mbias.shape)
        self.moutput = self.activation(np.matmul(self.minput, self.para) + self.mbias)
        return self.moutput

    def backward(self, mloss):
        # for the output layer, mloss is already the gradient with respect to the pre-activation
        if not self.isoutput:
            tloss = mloss * self.dactivation(self.moutput)
        else:
            tloss = mloss
        self.grad += np.matmul(self.minput.T, tloss)
        self.backout = np.matmul(tloss, self.para.T)
        self.gbias += np.squeeze(tloss, axis = 0)
        return self.backout

    def step(self, lr = 0.001, bcnt = 1, maxdiv = 1):
        self.para -= lr * bcnt * self.grad / maxdiv
        self.mbias -= lr * bcnt * self.gbias / maxdiv
        self.grad.fill(0)
        self.gbias.fill(0)

class MaxPooling(BaseLayer):
    def __init__(self, msize = 2):
        BaseLayer.__init__(self)
        self.msize = int(msize)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.maxid = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        # assumes a square input
        tmp = (self.inputsize[1] + self.msize - 1) // self.msize
        self.outputsize = (self.inputsize[0], tmp, tmp)
        self.moutput = np.zeros(self.outputsize)
        self.maxid = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        self.moutput.fill(0)
        for i in range(self.outputsize[0]):
            for j in range(self.outputsize[1]):
                for k in range(self.outputsize[2]):
                    # starting from 0 assumes non-negative inputs (e.g. after relu)
                    tmax = 0
                    tmaxid = 0
                    for j1 in range(self.msize):
                        if j * self.msize + j1 == self.inputsize[1]:
                            break
                        for k1 in range(self.msize):
                            if k * self.msize + k1 == self.inputsize[2]:
                                break
                            if self.minput[i][j * self.msize + j1][k * self.msize + k1] > tmax:
                                tmax = self.minput[i][j * self.msize + j1][k * self.msize + k1]
                                tmaxid = j1 * self.msize + k1
                    self.maxid[i][j][k] = tmaxid
                    self.moutput[i][j][k] = tmax
        return self.moutput

    def backward(self, mloss):
        self.backout.fill(0)
        for i in range(self.outputsize[0]):
            for j in range(self.outputsize[1]):
                for k in range(self.outputsize[2]):
                    tloss = mloss[i][j][k]
                    if np.abs(tloss) > 1e-12:
                        xid = int(self.maxid[i][j][k]) // self.msize
                        yid = int(self.maxid[i][j][k]) % self.msize
                        #print(j * self.msize + xid, k * self.msize + yid)
                        self.backout[i][j * self.msize + xid][k * self.msize + yid] = tloss
        return self.backout

class GlobalAveragePooling(BaseLayer):
    def __init__(self):
        BaseLayer.__init__(self)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.inputsize = None
        self.outputsize = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        self.outputsize = (self.inputsize[0], )
        self.moutput = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        for i in range(self.outputsize[0]):
            self.moutput[i] = minput[i].mean()
        return self.moutput

    def backward(self, mloss):
        for i in range(self.outputsize[0]):
            # d(mean)/d(input element) = 1 / (H * W), independent of the input value
            self.backout[i] = mloss[0][i] / (self.inputsize[1] * self.inputsize[2])
        return self.backout

class Flatten(BaseLayer):
    def __init__(self):
        BaseLayer.__init__(self)
        self.minput = None
        self.moutput = None
        self.backout = None
        self.inputsize = None
        self.outputsize = None

    def mcompile(self, inputshape = None, isoutput = False):
        self.inputsize = inputshape
        self.outputsize = (self.inputsize[0]*self.inputsize[1] * self.inputsize[2], )
        self.moutput = np.zeros(self.outputsize)
        self.backout = np.zeros(self.inputsize)
        return self.outputsize

    def forward(self, minput):
        self.minput = minput
        #for i in range(self.outputsize[0]):
        self.moutput = minput.flatten()
        return self.moutput

    def backward(self, mloss):
        self.backout = mloss.reshape(self.inputsize)
        return self.backout

class CNetwork:
    def __init__(self, inputsize):
        self.layerlist = []
        self.moutput = 
Noneself.inputsize = inputsizeself.outputfunc = Noneself.bstep = 0self.lr = 0.001def mcompile(self, lr = 0.001):nowinputshape = self.inputsize#print(nowinputshape)for layer in self.layerlist:flag = layer is self.layerlist[-1]nowinputshape = layer.mcompile(inputshape = nowinputshape, isoutput = flag)#print(nowinputshape)self.outputfunc = self.layerlist[-1].activationnameself.lr = lrdef add(self, nowlayer):self.layerlist.append(nowlayer)def forward(self, minput):for eachlayer in self.layerlist:minput = eachlayer.forward(minput)return copy.copy(minput)def backward(self, y, y_label):self.maxnum = 0.001self.bstep += 1loss = copy.copy(y)if self.outputfunc == 'softmax':tsumy = sum(y)loss[y_label] -= tsumyloss /= max(tsumy, 1e-4)elif self.outputfunc == 'sigmoid':if y_label == 1:loss -= 1loss = np.atleast_2d(loss)#print(loss)for layer in reversed(self.layerlist):loss = layer.backward(loss)def step(self):mdiv = 0for layer in self.layerlist:if layer.grad is not None:mdiv = max(mdiv, np.abs(layer.grad).max())mdiv = max(mdiv, layer.gbias.max())for layer in self.layerlist:layer.step(lr = self.lr, bcnt = self.bstep, maxdiv = max(mdiv // 1000, 1))self.bstep = 0def predict(self, minput):predictions = self.forward(minput)res = np.argmax(predictions[0])return resif __name__ == "__main__":#model = CNetwork(inputsize = (784,))model = CNetwork(inputsize = (1, 28, 28))model.add(Conv2D(filters = 6, msize = 5))model.add(MaxPooling(msize = 2))model.add(Conv2D(filters = 16, msize = 5))model.add(MaxPooling(msize = 2))#model.add(GlobalAveragePooling())model.add(Flatten())#model.add(Dense(msize = 256))model.add(Dense(msize = 64))model.add(Dense(msize = 10, activation = 'softmax'))model.mcompile(lr = 0.0001)x_train = np.load('mnist/x_train.npy') / 255y_train = np.load('mnist/y_train.npy')x_test = np.load('mnist/x_test.npy') / 255y_test = np.load('mnist/y_test.npy')#print(x_train.shape)#print(x_test.shape)'''epochs = 4for e in range(epochs):for i in range(len(x_train)):if y_train[i] > 
1:continuemoutput = model.forward(x_train[i].reshape(784, ))#print(moutput, y_train[i])model.backward(moutput, y_train[i])if i % 10 == 9:model.step()tcnt = 0tot = 0for i in range(len(x_test)):if y_test[i] < 2:tot += 1tmp = model.forward(x_test[i].reshape(784,))if int(tmp > 0.5) == y_test[i]:tcnt += 1print('epoch {},Accuracy {}%'.format(e+1,tcnt / tot * 100))'''epochs = 1for e in range(epochs):tot = 0for i in range(len(x_train)):# if y_train[i] >= 5:# continuemoutput = model.forward(np.expand_dims(x_train[i], axis = 0))print("case {}:".format(tot + 1), moutput, y_train[i])model.backward(np.squeeze(moutput, axis = 0), y_train[i])#if i > len(x_train)//2 - 20:#for i in range(2):# print(model.layerlist[i].backout)#print()#if i % 10 == 9:if tot % 5 == 4:model.step()tot += 1tcnt = 0tot = 0val_loss = 0for i in range(len(x_test)):#if y_test[i] < 5:tot += 1tmp = model.forward(np.expand_dims(x_test[i],axis = 0))tx = np.argmax(tmp[0])val_loss += min(-np.log(tmp[0][y_test[i]]/tmp[0].sum()), 100)if tx == y_test[i]:tcnt += 1val_loss /= totprint('epoch {},Accuracy {}%,val_loss {}'.format(e + 1, tcnt / tot * 100, val_loss))'''model = CNetwork(inputsize = (2,))model.add(Dense(msize = 16))model.add(Dense(msize = 8))model.add(Dense(msize = 1,activation = 'sigmoid'))model.mcompile(lr = 0.001)print(model.outputfunc)X = np.array([[0,0],[0,1],[1,0],[1,1]])y = np.array([0, 1, 1, 0])for i in range(10000):for j in range(4):moutput = model.forward(np.expand_dims(X[j], axis = 0))#print(moutput)model.backward(np.squeeze(moutput, axis = 0), y[j])model.step()for j in range(4):print(model.forward(X[j]))'''后面发现参数第一维比第二维高的时候好像不需要正交化,否则最后几个向量都会变成0
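The zero-vector effect can be reproduced with a small row-wise Gram-Schmidt sketch. The helper name `gram_schmidt_rows` and its `eps` threshold are hypothetical; it only stands in for the `myschmitt` method, whose definition is not shown here, under the assumption that `myschmitt` orthogonalizes the rows of the matrix.

```python
import numpy as np

def gram_schmidt_rows(mat, eps=1e-10):
    """Orthonormalize the rows of mat (modified Gram-Schmidt).

    Hypothetical stand-in for myschmitt. Rows whose residual is
    numerically zero are left as all-zero rows.
    """
    out = np.zeros_like(mat, dtype=float)
    for i in range(mat.shape[0]):
        v = mat[i].astype(float)
        # remove the components along the rows already processed
        for j in range(i):
            v = v - np.dot(v, out[j]) * out[j]
        norm = np.linalg.norm(v)
        if norm > eps:
            out[i] = v / norm
    return out

rng = np.random.default_rng(0)

# 3 rows in a 5-dimensional space: all rows can be made orthonormal
wide = gram_schmidt_rows(rng.random((3, 5)) - 0.5)
print(np.round(wide @ wide.T, 6))

# 5 rows in a 3-dimensional space: only 3 directions exist,
# so the last two rows have nothing left and collapse to zero
tall = gram_schmidt_rows(rng.random((5, 3)) - 0.5)
print(np.round(tall, 6))
```

At most as many mutually orthogonal nonzero vectors exist as the vector dimension, which is consistent with only calling the orthogonalization when `para.shape[0] <= para.shape[1]`.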

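In theory this should work better, but in practice the results did not seem very good (after later changes, accuracy reaches a little over 94%).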

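I just noticed that the convolution layer's parameters seem to be initialized too small; they should be initialized with larger values.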
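One way to judge whether a convolution kernel's scale is too small is to compare its root-mean-square value against a standard scheme. The sketch below uses He uniform initialization as that reference point; this is an assumption for illustration, not the fix the post eventually applied, and the names `he_uniform_limit`, `init_conv_weights` and `rms` are hypothetical.

```python
import numpy as np

def he_uniform_limit(in_channels, ksize):
    """Half-width of the He uniform range, sqrt(6 / fan_in),
    with fan_in = in_channels * ksize * ksize."""
    fan_in = in_channels * ksize * ksize
    return np.sqrt(6.0 / fan_in)

def init_conv_weights(filters, in_channels, ksize, rng):
    """Draw kernels of shape (filters, in_channels, ksize, ksize)
    uniformly from [-limit, limit]."""
    limit = he_uniform_limit(in_channels, ksize)
    return rng.uniform(-limit, limit, size=(filters, in_channels, ksize, ksize))

def rms(w):
    """Root-mean-square value of an array, a simple measure of its scale."""
    return float(np.sqrt(np.mean(np.square(w))))

rng = np.random.default_rng(0)
# same shape as the second Conv2D layer in the post: 16 filters, 6 input channels, 5x5
w = init_conv_weights(16, 6, 5, rng)
print(w.shape, he_uniform_limit(6, 5), rms(w))
```

Comparing `rms(layer.para)` after `mcompile` against `rms(w)` from this sketch gives a rough indication of how far the layer's actual scale is from the reference.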

The last few lines of the run output are as follows:

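Running these programs places no demands on the machine's performance; the only drawback is that the computer cannot be turned off while they run.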